Thales | Security for What Matters Most

AI has slipped into the boardroom, the clinic, the factory floor, and the classroom. It is not an experiment anymore; it is part of the infrastructure. Decisions once made by people are now shaped by algorithms. This boosts speed, but it also creates risk.

Data leaks through prompts. Training sets get poisoned. Shadow AI grows in the dark.

For all its efficiency, AI without control delivers exposure, and the first place to look is not the model but the data beneath it.

To secure AI, you must start by securing the data that fuels it. The models, the algorithms, and the pipelines all depend on data. If the foundation is weak, every layer above it becomes fragile. This is where Data Security Posture Management, or DSPM, has a central role to play.

The AI Security Challenge Starts with Data

A recent report, based on approximately 7 million workers, finds that 71.7% of AI tools in the workplace are high or critical risk. Moreover, 83.8% of enterprise data flowing into AI systems goes into these risky tools. It also reports that employees are increasingly inputting sensitive data into these tools: almost 35% of corporate data going into AI is sensitive, up from approximately 11% two years ago.

Behaviors like these create several data security risks:

Sensitive data leakage.

Employees may inadvertently feed PII, financial records, or intellectual property into AI tools. Once this data enters a training dataset, it may shape outputs in ways that reveal more than intended, or worse, be exposed to third parties. Once released, sensitive data cannot simply be recalled.

Shadow AI.

Business units often deploy models independently, and employees experiment with AI tools without governance. A report by Microsoft said that 71% of UK workers use unsanctioned AI tools at work, with over half doing so weekly. There is no audit trail, no consistent policy, and little oversight. Each shadow AI project expands the risk surface, turning well-intentioned experimentation into potential exposure.

Data poisoning.

Malefactors (or careless insiders) can manipulate datasets, introducing errors, bias, or malicious patterns. Even simple mistakes by users when curating or uploading data can poison a model. AI trained on poisoned data produces inaccurate or dangerous results, and as reliance on AI-driven decisions grows, so do the consequences.

Credential sprawl.

Users create API keys, tokens, and other secrets across cloud services, data lakes, and repositories. Each additional credential is a potential vulnerability. Bad actors know this. Compromised credentials remain one of the leading causes of data breaches, and AI pipelines are no exception.

Without visibility into where data lives, how it is being used, and how employees interact with it, AI quickly shifts from being an asset to a liability. Understanding and governing user behavior around AI is just as critical as securing the data itself.

80% of respondents do not feel highly confident in their ability to identify high-risk data sources (Cloud Security Alliance: Understanding Data Security Risk 2025)

DSPM As the First Line of Protection

This is where DSPM becomes critical. Its core functions map directly to the challenges associated with AI data protection.

Data Discovery For AI Security

DSPM automates the process of locating sensitive data. It identifies structured information like databases and unstructured content like documents, emails, or media. For AI, this means you can prevent regulated or high-risk data from entering training sets in the first place.
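
As a rough illustration of the discovery step, the sketch below scans text records for a few sensitive-data patterns and holds back anything that matches before it can reach a training set. The pattern set, function names, and sample records are all hypothetical, and real DSPM products rely on far richer classification than these regular expressions.

```python
import re

# Hypothetical, deliberately simple detectors; not a complete PII taxonomy.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def classify(text):
    """Return the sorted names of every pattern found in the text."""
    return sorted(name for name, rx in PATTERNS.items() if rx.search(text))


def filter_training_records(records):
    """Split records into those safe to train on and those flagged for review."""
    clean, flagged = [], []
    for record in records:
        findings = classify(record)
        if findings:
            flagged.append((record, findings))
        else:
            clean.append(record)
    return clean, flagged


# Illustrative sample data only.
records = [
    "Quarterly revenue grew in the EMEA region.",
    "Contact jane.doe@example.com about the invoice.",
    "Employee SSN 123-45-6789 is on file.",
]
clean, flagged = filter_training_records(records)
```

Here only the first record would pass through to training; the other two are returned with the reason they were flagged, so a reviewer can decide what to do with them.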

Access Controls and Monitoring

DSPM allows you to enforce policies on who can feed or query AI systems. It also monitors those interactions continuously. Misconfigurations are flagged. Excessive or inappropriate permissions are detected. The principle of least privilege becomes enforceable.
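
One simple way to picture a least-privilege check is to compare the permissions each identity holds with the permissions it has actually exercised. The sketch below assumes both are available as plain dictionaries; the identity names and permission strings are invented for illustration and do not reflect any particular product's data model.

```python
def excess_permissions(granted, used):
    """Map each principal to the permissions it holds but has not used."""
    report = {}
    for principal, perms in granted.items():
        unused = set(perms) - set(used.get(principal, ()))
        if unused:
            report[principal] = sorted(unused)
    return report


# Hypothetical entitlements and observed activity.
granted = {
    "ml-training-job": {"read:sales_db", "read:hr_records", "write:model_store"},
    "analyst-alice": {"query:chat_assistant"},
}
used = {
    "ml-training-job": {"read:sales_db", "write:model_store"},
    "analyst-alice": {"query:chat_assistant"},
}
report = excess_permissions(granted, used)
```

In this example the training job holds read access to HR records that it never uses, which makes that permission a candidate for removal.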

Credential and Secrets Management

DSPM secures encryption keys, secrets, and associated metadata. It helps reduce sprawl and centralizes oversight. This is not only about today’s risks. It also supports crypto agility, preparing organizations for the post-quantum era when existing algorithms may fail.
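
To make the idea of reducing sprawl concrete, the following sketch flags credentials that have outlived a rotation window. It assumes a central inventory mapping each credential name to its creation date; the names, dates, and 90-day window are hypothetical choices, not a recommendation from the source.

```python
from datetime import date, timedelta


def stale_credentials(inventory, today, max_age_days=90):
    """Return the names of credentials created before the rotation cutoff."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, created in inventory.items() if created < cutoff)


# Hypothetical inventory: credential name -> creation date.
inventory = {
    "s3-data-lake-key": date(2024, 1, 10),
    "vector-db-token": date(2025, 5, 1),
}
stale = stale_credentials(inventory, today=date(2025, 6, 1))
```

Passing `today` explicitly keeps the check reproducible, which is useful when the same inventory is re-evaluated for an audit.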

Usage Tracking and Behavioral Analytics

DSPM observes how data is used. It detects anomalies such as sudden spikes in AI queries, unusual data transfers, or abnormal prompt activity. It builds a behavioral baseline and alerts when something diverges. For AI data security, this matters because misuse can be subtle.
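
The baseline-and-alert idea can be sketched with a basic z-score test on daily prompt counts. This is a minimal stand-in for behavioral analytics, assuming a short numeric history per user; the threshold of three standard deviations and the sample counts are illustrative assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's count if it deviates from the historical mean by more
    than `threshold` sample standard deviations."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # flat baseline: any change is a deviation
    return abs(today - mu) / sigma > threshold


# Hypothetical prompt counts for one user over the past week.
daily_prompt_counts = [40, 42, 38, 45, 41, 39, 43]
spike = is_anomalous(daily_prompt_counts, 400)
normal = is_anomalous(daily_prompt_counts, 44)
```

A single statistic like this will miss subtle misuse, which is why the paragraph above stresses a behavioral baseline built from several signals rather than one counter.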

Posture Assessment

DSPM benchmarks your security controls. It provides risk scores. It identifies gaps before attackers exploit them. Before you connect sensitive data to AI models, you know whether your posture is strong enough. That knowledge is the difference between proactive defense and reactive clean-up.
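
A posture score can be thought of as a weighted checklist evaluated before a data store is connected to a model. The controls, weights, and acceptance threshold below are hypothetical and chosen only to show the shape of the calculation.

```python
# Hypothetical controls and weights; a real assessment would cover many more.
CONTROL_WEIGHTS = {
    "encryption_at_rest": 30,
    "least_privilege_reviewed": 25,
    "sensitive_data_classified": 25,
    "secrets_rotated": 20,
}


def risk_score(controls):
    """Return 0 (every control in place) up to 100 (no control in place)."""
    return sum(
        weight
        for name, weight in CONTROL_WEIGHTS.items()
        if not controls.get(name, False)
    )


def ready_for_ai(controls, max_risk=25):
    """Decide whether a data store may be connected to an AI model."""
    return risk_score(controls) <= max_risk


# Hypothetical state of one data store.
data_store = {
    "encryption_at_rest": True,
    "least_privilege_reviewed": False,
    "sensitive_data_classified": True,
    "secrets_rotated": True,
}
score = risk_score(data_store)
```

A control missing from the input counts as absent, so an incomplete assessment raises the score rather than lowering it.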

How DSPM Strengthens AI Trustworthiness

Beyond protection, DSPM strengthens the trustworthiness of AI itself.

- Regulatory compliance: Regulations like GDPR, HIPAA, and the EU AI Act demand clear evidence of control over data. DSPM generates the audit trails, the reporting, and the assurance you need to show compliance. For boards and regulators alike, that visibility matters.

- Brand trust: A single leak of sensitive training data can damage years of hard-won reputation. Customers and partners need assurance that their information is safe. DSPM helps prevent those leaks and safeguards trust.

- Responsible AI adoption: Data governance for AI should not be an afterthought. By placing DSPM at the center, you build governance into the lifecycle of AI. Policies are consistent, oversight is real, and decisions are traceable. That makes AI adoption more sustainable.

- Future resilience: Threats evolve. Post-quantum computing could render today’s encryption obsolete. AI itself enables more sophisticated insider threats and social engineering. DSPM does not stop at today’s threats. It prepares you for what comes next by embedding agility and continuous monitoring into your operations.

Why DSP And DSPM Belong Together

It is worth noting that DSPM does not operate in isolation. A Data Security Platform (DSP) provides encryption, tokenization, and strong enforcement of protective controls. DSPM, on the other hand, delivers visibility, discovery, and posture management.

In the AI era, both are necessary. DSP ensures that sensitive data in AI models is protected at rest and in motion. DSPM ensures that you can see where that data is, how it is being accessed, and whether controls are sufficient. One enforces, the other reveals. Together, they provide a closed loop of discovery, protection, and monitoring.

Many entities currently rely on a patchwork of four or more separate tools for managing data risks. That fragmentation breeds inefficiency and inconsistency. An integrated approach combining DSP and DSPM closes the gaps. For AI security, that integration is not optional. It is essential.

Building A Proactive Risk Posture

Too many organizations still approach security reactively. Compliance drives activity, while checklists become the focus. Yet compliance alone is not enough. Threats move faster than regulation.

The survey work from the Cloud Security Alliance underscores this. Some 80% of respondents said they did not feel highly confident in their ability to identify high-risk data sources. Another 54% admitted to operating with manual or semi-automated processes, and 31% lacked the tools to identify their riskiest data sources at all.

This lack of visibility translates into exposure. Attackers exploit it. AI systems built on such shaky ground inherit the weaknesses.

A proactive risk posture demands more. It needs continuous monitoring, automated discovery, and analytics that flag exposure before it is too late. DSPM brings those capabilities, transforming visibility into action.

AI Security Starts With Data

AI is not a side project anymore. It is becoming a core capability of modern business. But with scale comes risk. And at the heart of that risk is data.

You cannot secure AI if you cannot secure the data that drives it. Models trained on unclassified or poisoned data will betray you. Pipelines built on scattered credentials will be breached. Shadow AI will slip through cracks in governance.

DSPM provides the visibility, control, and governance required to protect AI systems. It discovers sensitive data, enforces access discipline, secures secrets, tracks usage, and assesses posture. It helps you comply with regulation, protect brand trust, and prepare for future threats.

For leaders, the message is clear: if you want to secure AI, begin with data. DSPM should be your first line of protection.

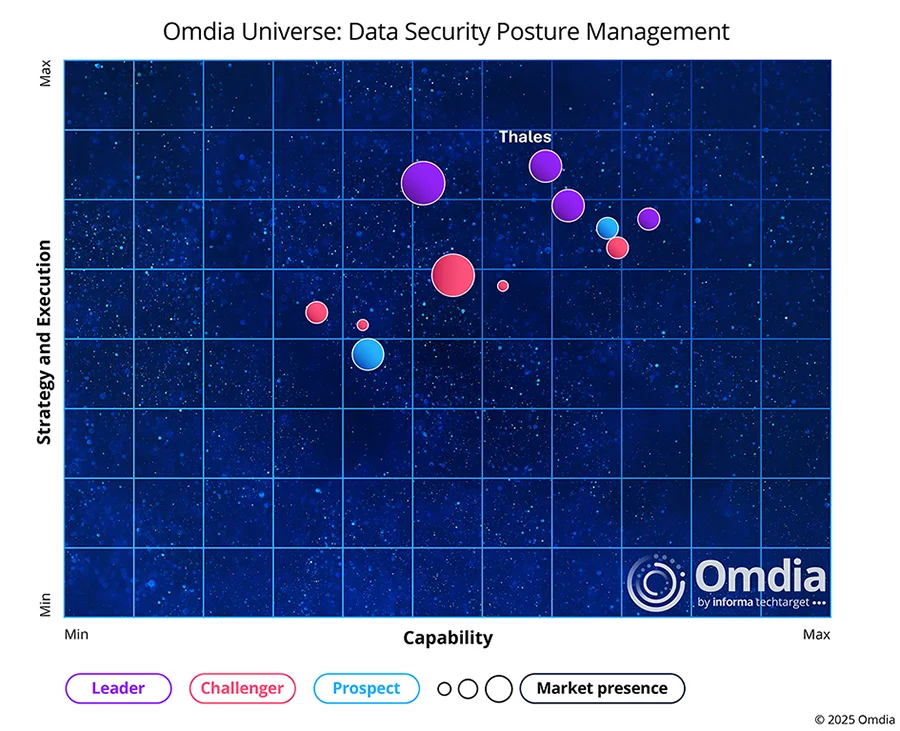

Thales offers solutions that integrate DSPM into your broader data security strategy. Thales DSPM is designed to give you the visibility and control you need. To explore how it can help you secure the data that fuels your AI, visit the Thales DSPM solution page.