This was my first year at Sapphire, which I attended because of a joint project that SAP Co-Innovation Labs (COIL), Vormetric, Intel and Virtustream have been collaborating on for the past few months to secure HANA in the cloud (more on our joint project below). I was impressed by the show, to say the least.

The sheer size, sprawl and industry participation at the conference made me realize the magnitude of the SAP ecosystem and the number of enterprise customers that depend on SAP to run their businesses. What struck me most was the innovation at multiple levels. On the one hand, OEMs such as HP, IBM and SGI are leveraging Intel Ivy Bridge processors (Xeon E7-4890 v2) to build scale-out and scale-up systems focused on in-memory computing, with high core density (think 4096 cores) and 12 TB of memory in a single memory pool, all optimized for HANA. On the other hand, a plethora of applications, ranging from reporting to real-time analytics, are rounding out the HANA ecosystem and providing value to enterprise customers. With the goal of a strong ecosystem in mind, SAP is investing heavily in making it possible for partners to succeed with offerings built around HANA.

And without a doubt, SAP is betting the farm on HANA and, in the process, redefining itself as a cloud company. Not only is SAP offering HANA as a service, but it has also created a model for other service providers to offer HANA as a service. VMware's announcement that it will offer HANA as a vCloud service underscores the shift to the cloud.

SAP COIL Labs / HANA, Vormetric, Virtustream and Intel joint project.

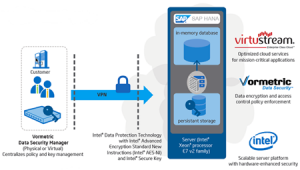

With three powerful trends playing out in the industry (real-time data analytics, cloud computing, and a growing number of regulatory requirements for data protection), security becomes the catalyst for migrating enterprise workloads to the cloud. Technology partners SAP, Vormetric and Intel collaborated in a COIL project with two objectives:

- Secure HANA data and log volumes using Vormetric Transparent Encryption and Data Security Manager

- Demonstrate minimal performance overhead of encryption

Vormetric is very fortunate to have a deep technology partnership with Intel, and now with SAP COIL, to demonstrate the value of Vormetric Data Security solutions in helping enterprise customers comply with regulatory requirements. By enabling customers to become the custodians of their own encryption keys and access control policies, and by encrypting data in the cloud with very little overhead, Vormetric solutions complement cloud security best practices and assure enterprise customers that their data is secure and that they can meet their compliance obligations.

The following graphic illustrates how this joint solution will protect HANA in the cloud.

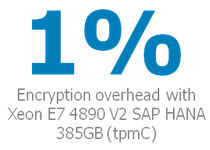

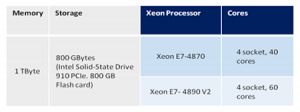

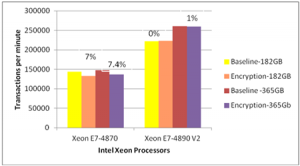

The test results below highlight the performance improvement between the previous and current generations of Intel Xeon processors in this environment, and illustrate that data-at-rest protection with encryption does not need to come with a penalty: with previous-generation processors, the encryption overhead in this transaction-intensive environment is 7%, and with current-generation processors it is as little as 1%.

- For the same dataset sizes, the E7-4890 v2 outperforms the E7-4870 by ~75% (tpmC)

- When the dataset size is doubled, the E7-4870 system is taxed only an additional 0.4%

- For the E7-4890 v2 system:

  - there is no encryption overhead with the 182 GB dataset (tpmC)

  - encryption overhead is only 1% with the 365 GB dataset (tpmC)
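The overhead and speedup figures above are relative differences in tpmC (transactions per minute). The post does not spell out its exact formula, so here is a minimal sketch assuming overhead is the drop in tpmC relative to the unencrypted run, and speedup is the gain relative to the older system. The inputs in the usage lines are hypothetical round numbers, not the measured benchmark values.

```python
def encryption_overhead_pct(tpmc_plain: float, tpmc_encrypted: float) -> float:
    """Percent drop in throughput (tpmC) when encryption is enabled.

    Assumption: overhead = (plain - encrypted) / plain * 100.
    """
    if tpmc_plain <= 0:
        raise ValueError("baseline tpmC must be positive")
    return (tpmc_plain - tpmc_encrypted) / tpmc_plain * 100.0


def speedup_pct(tpmc_new: float, tpmc_old: float) -> float:
    """Percent by which the newer system's tpmC exceeds the older system's."""
    if tpmc_old <= 0:
        raise ValueError("baseline tpmC must be positive")
    return (tpmc_new - tpmc_old) / tpmc_old * 100.0


if __name__ == "__main__":
    # Hypothetical tpmC values for illustration only; not the benchmark's data.
    print(f"{encryption_overhead_pct(100_000, 99_000):.1f}%")  # 1.0%
    print(f"{speedup_pct(175_000, 100_000):.1f}%")  # 75.0%
```

Normalizing against the unencrypted (or older) baseline keeps the percentages comparable across dataset sizes, since absolute tpmC changes with both hardware and data volume.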

In this benchmarking exercise, with the given configuration and dataset size, we could only drive CPU utilization to about 37%. Driving CPU utilization higher would require larger datasets, a more scalable storage configuration and more memory. While higher workloads might expose other resource bottlenecks, these results lead to the hypothesis that the overhead due to encryption is likely to remain in the single-digit percentages. I suspect the overhead is low because of HANA's in-memory computing: most of the dataset is pre-fetched into memory, compressed and stored in columnar formats optimized for blazing-fast analytics.

The results clearly illustrate that data-at-rest security doesn't have to come with a performance hit, even in transaction-heavy environments.

There is still more work to be done: further joint efforts are planned to extend the test results to larger configurations, analytic and batch workloads, and more.

Ashvin Kamaraju | Vice President of Engineering, Strategy & Innovation