If you were to ask a lot of security professionals what they first think of when they hear the word “tokenization,” many would immediately reply “PCI DSS.” Those who’ve been down the path of a PCI audit know that by leveraging tokenization, they can take databases out of scope, which means they can reduce the time they spend dealing with QSAs, and save a lot of time and money in the process. Although PCI DSS compliance is still the primary driver for tokenization, Vormetric customers are telling us they see more opportunity to leverage tokenization than ever before.

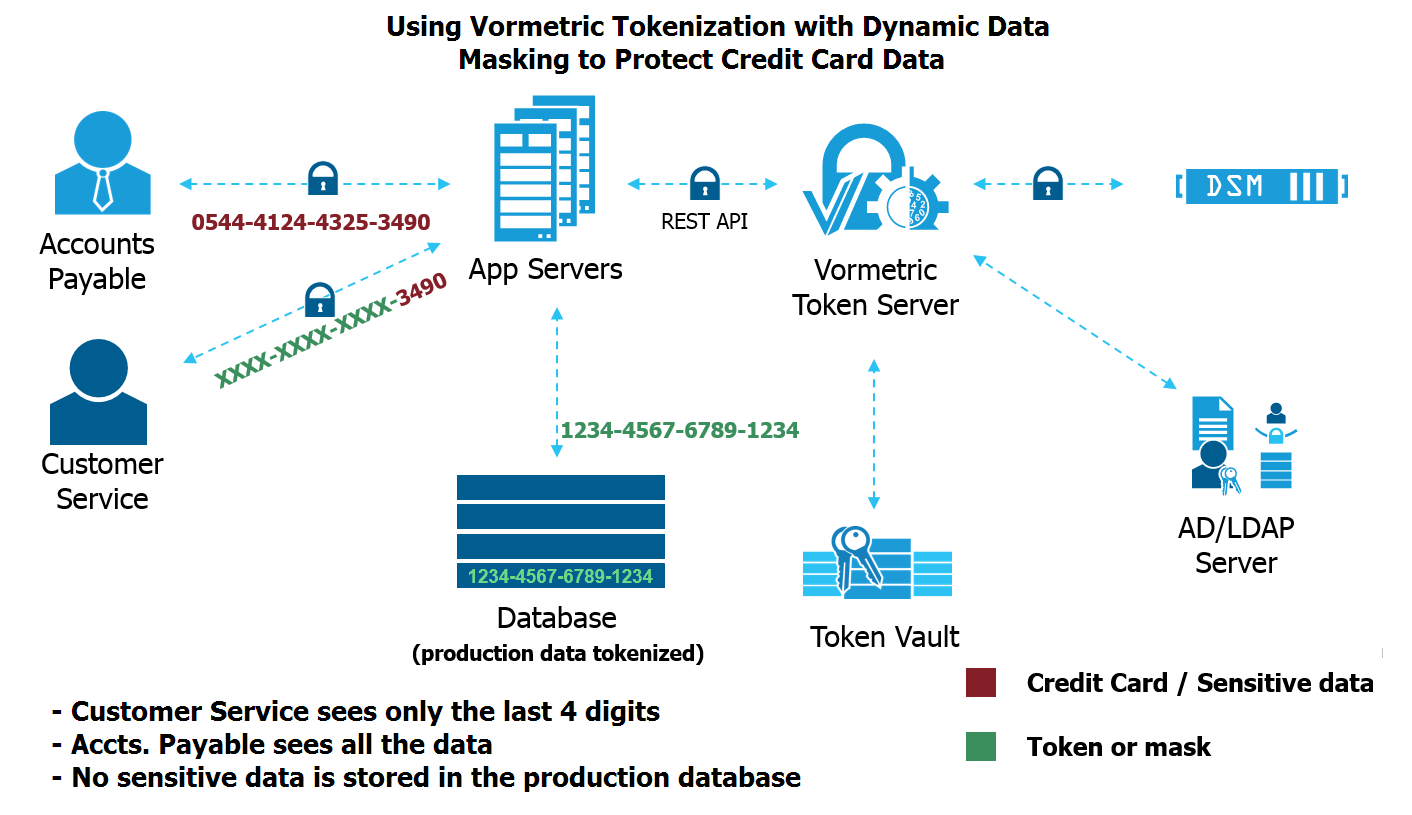

What’s tokenization? Without going into a full tutorial here (we are lucky to have Wikipedia for that), I’ll just summarize. Tokenization is a form of data-centric security that replaces sensitive production data with similar-looking representations called tokens. For example, a tokenized credit card number will look like a real credit card number, but if it is stolen from the production database, it won’t be of any value to the thief. The actual production data is kept in a token vault and secured through encryption and other security practices. There are many techniques available for creating and managing tokens.
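To make the vault idea concrete, here is a minimal Python sketch of vault-based tokenization. It is an illustration only, not how Vormetric Tokenization works internally: the `TokenVault` class and its method names are hypothetical, the vault is a plain in-memory dictionary, and a real vault would encrypt what it stores and restrict who can call `detokenize`.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only.

    A production vault would encrypt stored values and enforce access control.
    """

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, card_number: str) -> str:
        """Return a random token with the same number of digits as the input."""
        if not card_number.isdigit():
            raise ValueError("this sketch only handles all-digit values")
        # Reuse the existing token so the same value always maps to one token.
        if card_number in self._value_to_token:
            return self._value_to_token[card_number]
        while True:
            token = "".join(secrets.choice("0123456789") for _ in card_number)
            # Retry in the unlikely case of a collision with real or stored data.
            if token != card_number and token not in self._token_to_value:
                break
        self._token_to_value[token] = card_number
        self._value_to_token[card_number] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # a random 16-digit string, stored in place of the card number
print(vault.detokenize(token))  # the original value, available only through the vault
```

The production database would hold only `token`. Because the token is random rather than derived from the card number, a stolen copy of the database reveals nothing without access to the vault.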

At the end of 2013, we sent a survey to over 100 customers, asking how they used Vormetric Transparent Encryption, our product for managing file-level encryption, privileged user access control, and security intelligence logging. In addition, we asked where they would like to see us take our product roadmap. We were surprised to see how many people responded that they would strongly consider Vormetric for tokenization solutions. In further conversations, we continued to hear the same message: Our customers trust the Vormetric Data Security Platform and they want us to continue to solve more data-centric security use cases, with tokenization being high on the list.

Why? It turns out that many of our customers had to bring in a second vendor to do tokenization, typically for reducing PCI DSS audit scope. This was very expensive, because it meant they had to deploy a second data security infrastructure and absorb all the capital and operational expenses that go with it.

Furthermore, with the explosive adoption of cloud services and big data analytics, new use cases were arising for controlling access in these environments. Again, these security teams were using Vormetric to protect the infrastructure, but in some cases they needed column-level security as well, like application-layer encryption or tokenization. Following are a few specific scenarios:

- A company is moving its database into a cloud environment where it has no control over, or visibility into, the security infrastructure. The security team wants to protect sensitive data, both against theft by external hackers and against exposure to malicious or negligent cloud administrators, so they tokenize the data before it is migrated.

- A business group needs to share a customer database with a big data analytics team. They don’t want to lose control of their sensitive data or violate security policies and compliance mandates surrounding PII and PHI. As a result, before the database is migrated, they tokenize this sensitive data so that it isn’t exposed.

- To adhere to relevant data jurisdiction laws, an international organization must ensure that subsets of personal data aren’t accessed by individuals outside of specific consumers’ countries of residence. By implementing tokenization, the security team can ensure that only authorized users based in a specified country can access the token vault and ultimately gain access to the consumer’s data.

I’m very proud to announce the availability of Vormetric Tokenization with Dynamic Data Masking. This solution offers two key capabilities. First, with its tokenization capabilities, the solution protects sensitive data at rest in production databases. Second, it offers dynamic data masking that enables role-based display security.

With dynamic data masking, security teams can establish granular policies so different levels of clear-text data are available depending on users’ roles. For example, a customer service representative at a call center who routinely asks customers, “What are the last four digits of your social security number?” may only be able to see those last four digits, which is enough to verify a caller’s identity. At the same time, the redaction of the rest of the number significantly reduces the risk of identity theft. Of course, an authorized user who needs to see a full social security number to run a credit check will be able to do so.
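The role-based behavior described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the product's policy engine: the role names, the `ROLE_VISIBLE_DIGITS` table, and the `mask_ssn` function are all hypothetical, and unknown roles are assumed to see no digits at all.

```python
# Hypothetical policy table: how many trailing digits each role may see.
ROLE_VISIBLE_DIGITS = {
    "call_center_rep": 4,   # enough to verify a caller's identity
    "credit_analyst": 9,    # needs the full number to run a credit check
}


def mask_ssn(ssn: str, role: str) -> str:
    """Mask a 9-digit social security number according to the caller's role.

    Separators such as dashes are preserved; hidden digits become 'X'.
    Roles missing from the policy table see no digits (an assumption here).
    """
    total_digits = sum(ch.isdigit() for ch in ssn)
    if total_digits != 9:
        raise ValueError("expected a 9-digit social security number")
    visible = ROLE_VISIBLE_DIGITS.get(role, 0)
    hidden = total_digits - visible
    masked = []
    seen = 0
    for ch in ssn:
        if ch.isdigit():
            seen += 1
            # Hide the leading digits; reveal only the permitted trailing ones.
            masked.append("X" if seen <= hidden else ch)
        else:
            masked.append(ch)
    return "".join(masked)


print(mask_ssn("123-45-6789", "call_center_rep"))  # XXX-XX-6789
print(mask_ssn("123-45-6789", "credit_analyst"))   # 123-45-6789
print(mask_ssn("123-45-6789", "intern"))           # XXX-XX-XXXX
```

The masking is applied at display time, so the same stored record yields different views depending on who is asking, and defaulting unknown roles to full redaction keeps a missing policy entry from exposing data.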

However, the most exciting part of this solution is the economic benefit it offers our customers. Encryption keys for the new solution are managed through the Vormetric Data Security Manager, just as they are for all other Vormetric products. The new Vormetric Token Server is a virtual appliance and is free. The solution is priced by the number of servers that are being protected simultaneously, a pricing structure very similar to that of other Vormetric products. What does this mean? Existing Vormetric customers can add tokenization for a fraction of the cost of bringing in a tokenization solution from another vendor. Plus, new customers can deploy a mix of file-level encryption, application-layer encryption, key management, and tokenization for 50-70% less than it would cost to use solutions from multiple vendors. That’s the power of the Vormetric Data Security Platform: As corny as it sounds, we are delivering a lot more for a lot less.

Click on this link to learn more about the new Vormetric Tokenization with Dynamic Data Masking solution or download the white paper here. If you have any questions or you want to see a solution demo, don’t hesitate to email info@vormetric.com.

Charles Goldberg | VP, product marketing
