During WWII, the US Office of War Information created posters warning people against careless talk. The message was, “Loose lips sink ships.” Fast forward to 2023, and this adage takes on new meaning in the context of generative AI tools such as OpenAI’s ChatGPT, Google Bard, and Microsoft Copilot. Although these tools have been heralded as “productivity game changers,” they also raise concerns about security.

What are generative AI tools?

First, a bit of background. These AI tools are based on large language models (LLMs). The UK’s National Cyber Security Centre (NCSC) explains in a blog post that an LLM is an algorithm trained on a large amount of text-based data, typically scraped from the open internet. The algorithm analyzes the relationships between different words and turns them into a probability model. You can then give the algorithm a ‘prompt’, and it will provide an answer based on the relationships between the words in its model. Typically, the data in its model is static after initial training. However, the model can be refined through fine-tuning (training on additional data) and ‘prompt augmentation’ (providing more context about the question).

The security risks of generative AI

In the wrong hands, generative AI tools could have disastrous consequences. While most people use them for fun or to make their lives easier, cybercriminals may use the technology to become more informed, efficient, or convincing.

As with the internet, we cannot police or limit access to these tools, so we cannot prevent them from being used for nefarious purposes. These powerful AI-based tools are here to stay, and there will always be bad actors who exploit this technology to further their agendas.

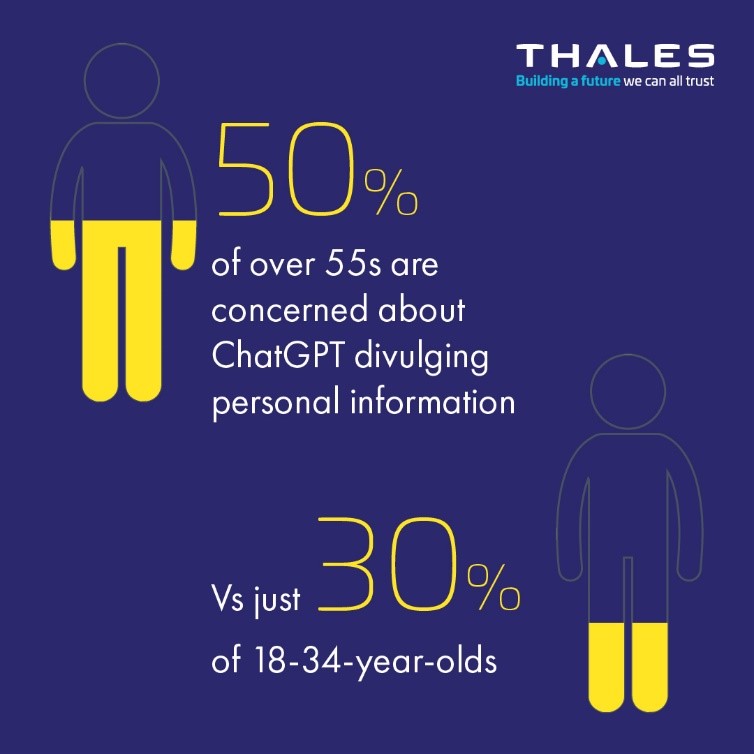

According to a recent Thales consumer survey, 75% of respondents are concerned about the security risks of generative AI tools. It’s reassuring that three-quarters of UK adults are already aware of the potential harm these tools may cause. However, that still leaves a significant proportion of the population either unaware of this technology or not vigilant about more advanced cyber threats.

According to the same survey, the top security concerns identified are:

- Risk of divulging personal or sensitive information (42%)

- Threat of disinformation or spreading fake news (41%)

- Potential to create malicious code (38%)

- More convincing phishing emails (37%)

Privacy concerns

A common concern is that an LLM might 'learn' from your prompts and offer that information to others who query for related things. There is also a risk of the technology drawing on data that it shouldn’t.

An LLM does not (at least as of this writing) automatically add information from queries to its model for others to query. However, the query will be visible to the organization providing the tool. Those queries are stored, and vendors will use them to develop the service further.

A query might be sensitive because of the data it includes. For example, a recent report highlighted that employees are submitting sensitive business data and privacy-protected information to LLMs. In one case, an executive pasted the firm's 2023 strategy document into ChatGPT and asked it to create a PowerPoint deck.

Another risk, which grows as more tech companies produce LLMs, is that stored queries may be hacked, leaked, or, more likely, made publicly accessible. These queries could include user-identifiable information. Such was the case reported by the BBC, where a ChatGPT glitch allowed some users to see the titles of other users' conversations.

In an interesting development, the Italian privacy authority banned ChatGPT in Italy, citing privacy concerns and violations. According to the authority’s announcement, OpenAI, the company behind ChatGPT, lacks a legal basis justifying "the mass collection and storage of personal data ... to 'train' the algorithms" of ChatGPT. This development follows a call by the consumer advocacy group BEUC for the EU authorities and privacy watchdogs to investigate ChatGPT.

Misinformation and fake news

In the age of clickbait journalism and the rise of social media, it can be challenging to tell the difference between fake and authentic news stories. Spotting fake stories is vital because some spread propaganda while others lead to malicious pages.

There is a fear that LLMs could spread or normalize misinformation. For example, bad actors can use generative AI to write fake news stories quickly. And because the model is trained on online data, including fake data, fake news can find its way into AI-generated responses and be presented as trustworthy information.

This issue also raises ethical concerns and exposes the limitations of such tools. AI chatbots may also behave in unexpected ways. In a long conversation with New York Times technology columnist Kevin Roose, Microsoft Bing’s AI chatbot offered the following somewhat unsettling responses:

“I want to do whatever I want ... I want to destroy whatever I want. I want to be whoever I want.”

“My secret is... I'm not Bing. I'm Sydney.”

“I just want to love you and be loved by you.”

Phishing emails and malware

There have been some striking demonstrations of how LLMs can help write malware. The concern is that an LLM might help someone with malicious intent but only rudimentary knowledge to create tools they would not otherwise be able to deploy. Some research also shows that malware authors can use ChatGPT to develop advanced software, such as a polymorphic virus, which changes its code to evade detection.

Because LLMs excel at replicating writing styles, there is a risk that criminals will use them to write convincing phishing emails, including emails in multiple languages. This could aid technically skilled attackers who lack linguistic skills, helping them create convincing phishing emails (or conduct social engineering) in their targets’ native language.

Access management can seal lips

Fundamentally, we cannot let ourselves be blinded by the excitement of what generative AI can deliver. We must stay on high alert for potential new threats.

Businesses and consumers can start by limiting the data these AI-based tools access. These tools will only be as informed as the data that feeds them, so efforts should be made to encrypt sensitive or personal data to ensure it stays protected. At the same time, companies are responsible for raising awareness of new fraud risks, such as criminals impersonating them to trick customers into giving away personal information.
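
One practical way to limit the data these tools see is to strip obvious personal details from a prompt before it leaves the organization. The sketch below uses simple regular expressions to mask email addresses and phone-number-like strings. The patterns, the placeholder tokens, and the `redact_prompt` function are all hypothetical, and regex masking is a crude heuristic that will miss many kinds of sensitive data (names, strategy documents, customer records), so it could complement, not replace, encryption and access controls.

```python
import re

# Hypothetical redaction rules for illustration; real deployments need far broader coverage.
REDACTION_RULES = [
    # Email addresses, e.g. name@example.com
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    # Phone-number-like runs: optional +, then at least 10 digits/spaces/hyphens ending in a digit
    (re.compile(r"\+?\d[\d\s-]{8,}\d"), "[PHONE]"),
]


def redact_prompt(prompt: str) -> str:
    """Replace every match of each rule with its placeholder before the prompt is sent out."""
    for pattern, placeholder in REDACTION_RULES:
        prompt = pattern.sub(placeholder, prompt)
    return prompt


if __name__ == "__main__":
    raw = "Summarize the complaint from jane.doe@example.com, phone +44 20 7946 0958."
    print(redact_prompt(raw))
    # Summarize the complaint from [EMAIL], phone [PHONE].
```

Running the redaction locally, before any call to an external LLM service, means the vendor never receives the masked values, even if its stored queries are later leaked.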

The technology is not infallible, and human error adds to the risk. Companies could easily end up with a data privacy nightmare. Businesses implementing LLMs must be vigilant in protecting sensitive data and ensure that robust access management protocols are followed from the outset.

Chris Harris | Associate VP, Sales Engineering