Data Protection Questions & Answers

Enterprises and government entities are committed to digital transformation. For them to succeed, information must be trustworthy and reliable. This is why keeping data secure throughout its lifecycle has become a critical priority.

Thales has for over three decades been a leader in digital security, including encryption; encryption key and "secrets" management; hardware security modules (HSMs); and signing, certificates, and stamping. This Q&A offers brief, accurate definitions of key terms in each of these areas of digital security practice, as well as links for further reading.

What is Encryption Key Management?

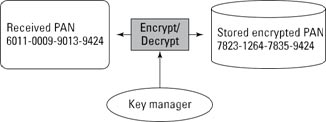

Encryption is a process that uses algorithms to encode data as ciphertext. This ciphertext can be made meaningful again only if the person or application accessing the data has the data encryption keys needed to decode it. So if the data is stolen or accidentally shared, it remains protected because it is indecipherable without those keys.

Controlling and maintaining data encryption keys is an essential part of any data encryption strategy because anyone who obtains the keys, including a cybercriminal, can return encrypted data to its original unencrypted state. An encryption key management system covers the generation, exchange, storage, use, destruction, and replacement of encryption keys.

According to Securosis’s White Paper, "Pragmatic Key Management for Data Encryption":

- Many data encryption systems don’t bother with “real” key management – they only store data encryption keys locally, and users never interact with the keys directly. Super-simple implementations don’t bother to store the key at all – it is generated as needed from the passphrase. In slightly more complex (but still relatively simple) cases the encryption key is actually stored with the data, protected by a series of other keys which are still generated from passphrases.

- There is a clear division between this and the enterprise model, where you actively manage keys. Key management involves separating keys from data for increased flexibility and security. You can have multiple keys for the same data, the same key for multiple files, key backup and recovery, and many more choices.
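The "super-simple" case described in the quote, where no key is stored and the key is regenerated from a passphrase on demand, can be sketched with Python's standard library. This is a minimal illustration, not any vendor's implementation: the function name `derive_key`, the passphrases, and the iteration count are assumptions chosen for the example.

```python
import hashlib
import secrets

def derive_key(passphrase: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Derive a 32-byte key from a passphrase with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32
    )

# The salt is random but not secret; it is kept next to the encrypted data.
salt = secrets.token_bytes(16)

# The same passphrase and salt always regenerate the same key,
# so the key itself never has to be written to disk.
key_a = derive_key("correct horse battery staple", salt)
key_b = derive_key("correct horse battery staple", salt)

# A different passphrase yields an unrelated key.
wrong = derive_key("wrong passphrase", salt)
```

The drawback the quote points to is visible here: the key is only as strong as the passphrase, and there is no separate place to back up, rotate, or revoke it.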

Best practice is to use a dedicated external key management system. There are four types:

1. An HSM or other hardware key management appliance, which provides the highest level of physical security

2. A key management virtual appliance

3. Key management software, which can run either on a dedicated server or within a virtual/cloud server

4. Key Management Software as a Service (SaaS)

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications by Securosis – White Paper

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Securing Microsoft Office365 and other Azure applications with CipherTrust Key Broker for Azure – White Paper

- The Importance of KMIP Standard for Centralized Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is a Centralized Key Management System?

As organizations deploy ever-increasing numbers of encryption solutions, they find themselves managing inconsistent policies and different levels of protection, and experiencing escalating costs. The best way through this maze is often to transition to a centralized encryption key management system. In this model, and in contrast to the use of hardware security modules (HSMs), the key management system performs only key management tasks, acting on behalf of other systems that perform cryptographic operations using those keys.

The benefits of a centralized key management system include:

- Unified key management and encryption policies

- System-wide key revocation

- A single point to protect

- Cost reduction through automation

- Consolidated audit information

- A single point for recovery

- Convenient separation of duty

- Key mobility
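Several of the benefits listed above (a single point of control, system-wide revocation, consolidated audit information) can be illustrated with a toy in-memory model. The class `CentralKeyManager` and its methods are hypothetical names invented for this sketch; a real key manager would add authentication, persistence, and hardware protection.

```python
import secrets
from dataclasses import dataclass, field

@dataclass
class CentralKeyManager:
    """Toy key manager: releases keys to other systems, never encrypts data itself."""
    _keys: dict = field(default_factory=dict)
    _revoked: set = field(default_factory=set)
    audit_log: list = field(default_factory=list)

    def create_key(self, key_id: str) -> None:
        self._keys[key_id] = secrets.token_bytes(32)
        self.audit_log.append(("create", key_id, "key-manager"))

    def get_key(self, key_id: str, requester: str) -> bytes:
        # One revocation check covers every system that uses this key.
        if key_id in self._revoked:
            self.audit_log.append(("denied", key_id, requester))
            raise PermissionError(f"key {key_id!r} has been revoked")
        self.audit_log.append(("get", key_id, requester))
        return self._keys[key_id]

    def revoke(self, key_id: str) -> None:
        self._revoked.add(key_id)
        self.audit_log.append(("revoke", key_id, "key-manager"))

kms = CentralKeyManager()
kms.create_key("db-backups")
key_for_db = kms.get_key("db-backups", requester="database-server")
key_for_storage = kms.get_key("db-backups", requester="storage-array")

kms.revoke("db-backups")
try:
    kms.get_key("db-backups", requester="database-server")
    revoked_key_released = True
except PermissionError:
    revoked_key_released = False
```

Every request, successful or denied, lands in the same `audit_log`, which is the point of consolidating key management in one place.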

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications by Securosis – White Paper

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Securing Microsoft Office365 and other Azure applications with CipherTrust Key Broker for Azure – White Paper

- The Importance of KMIP Standard for Centralized Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is Storage Encryption?

Storage encryption is the use of encryption for data both in transit and on storage media. Data is encrypted while it passes to storage devices, such as individual hard disks, tape drives, or the libraries and arrays that contain them. Using storage level encryption along with database and file encryption goes a long way toward offsetting the risk of losing your data. Like network encryption, storage encryption is a relatively blunt instrument, typically protecting all the data on each tape or disk regardless of the type or sensitivity of the data.

Storage encryption is a good way to ensure your data stays safe if the media holding it are lost. However, it is considered more secure to encrypt data in databases at the level of individual files, volumes, or columns. This may even be required for compliance if data is shared with other users or is subject to specific audit requirements.

Related Articles

Secure your data at rest, comply with regulatory and industry standards and protect your organization’s reputation. Learn how Thales can help:

- Data at Rest Encryption

- CipherTrust Data Security Platform

- CipherTrust Transparent Encryption -- White Paper

- CipherTrust Transparent Encryption -- Product Brief

- Selecting the Right Encryption Approach

- The Key Pillars for Protecting Sensitive Data -- White Paper

- The Enterprise Encryption Blueprint -- White Paper

- Encrypt Everything -- eBook

What is Bring Your Own Key (BYOK)?

While cloud computing offers many advantages, a major disadvantage has been security, because data physically resides with the cloud service provider (CSP) and out of the direct control of the owner of the data. For enterprises that elect to use encryption to protect their data, securing their encryption keys is of paramount importance.

Bring Your Own Key (BYOK) is an encryption key management system that allows enterprises to encrypt their data and retain control and management of their encryption keys. However, some BYOK plans upload the encryption keys to the CSP infrastructure. In these cases, the enterprise has once again forfeited control of its keys.

A best-practice solution to this "Bring Your Own Key" problem is for the enterprise to generate strong keys in a tamper-resistant hardware security module (HSM) and control the secure export of its keys to the cloud, thereby strengthening its key management practices.

What is FIPS 140-2?

FIPS (Federal Information Processing Standard) 140-2 is the benchmark for validating the effectiveness of cryptographic hardware. If a product has a FIPS 140-2 certificate you know that it has been tested and formally validated by the U.S. and Canadian Governments. Although FIPS 140-2 is a U.S./Canadian Federal standard, FIPS 140-2 compliance has been widely adopted around the world in both governmental and non-governmental sectors as a practical security benchmark and realistic best practice.

Organizations use the FIPS 140-2 standard to ensure that the hardware they select meets specific security requirements. The FIPS certification standard defines four increasing, qualitative levels of security:

Level 1: Requires production-grade equipment and externally tested algorithms.

Level 2: Adds requirements for physical tamper-evidence and role-based authentication. Software implementations must run on an Operating System approved to Common Criteria at EAL2.

Level 3: Adds requirements for physical tamper-resistance and identity-based authentication. There must also be physical or logical separation between the interfaces by which “critical security parameters” enter and leave the module. Private keys can only enter or leave in encrypted form.

Level 4: This level makes the physical security requirements more stringent, requiring the ability to be tamper-active, erasing the contents of the device if it detects various forms of environmental attack.

The FIPS 140-2 standard technically allows for software-only implementations at Level 3 or 4 but applies such stringent requirements that none have been validated.

For many organizations, requiring certification at FIPS 140-2 Level 3 is a good compromise between effective security, operational convenience, and choice in the marketplace.

What is DNSSEC?

The domain name system (DNS) is effectively the Internet’s address book; it enables website names to be matched to their corresponding registered IP addresses. But illicit alteration of web queries can point end users or services to rogue IP addresses and route them to illegitimate servers for the purpose of data theft. The Domain Name System Security Extensions (DNSSEC) have been created in response to this threat. DNSSEC is a mechanism that involves the use of digital signatures to enable servers to authenticate and verify the integrity of DNS responses to queries.

The Role of Hardware Security Modules

Hardware Security Modules (HSMs) enable top level domains (TLDs), registrars, registries, and enterprises to secure critically important signing processes used to validate the integrity of DNSSEC responses across the Internet. They protect the DNS from what are commonly referred to as “cache poisoning” and “man-in-the-middle” attacks. HSMs provide proven and auditable security advantages, enabling proper generation and storage for signing keys to assure the integrity of the DNSSEC validation process.

What is a Credentials Management System?

Organizations require user credentials to control access to sensitive data. Deploying a sound credential management system, or several credential management systems, is critical to secure all systems and information. Authorities must be able to create and revoke credentials as customers and employees come and go or change roles, and as business processes and policies evolve. Furthermore, the rise of privacy regulations and other security mandates increases the need for organizations to demonstrate the ability to validate the identity of online consumers and internal privileged users.

Challenges Associated with Credential Management

- Attackers who gain control of your credential management system can issue credentials that make them an insider, potentially with privileges to compromise systems undetected.

- Compromised credential management processes result in the need to re-issue credentials, which can be an expensive and time-consuming process.

- Credential validation rates can vary enormously and can easily outpace the performance characteristics of a credential management system, jeopardizing business continuity.

- Business application owners’ expectations around security and trust models are rising and can expose credential management as a weak link that may jeopardize compliance claims.

Hardware Security Modules (HSMs)

Hardware Security Modules (HSMs) are hardened, tamper-resistant hardware devices that strengthen encryption practices by generating keys, encrypting and decrypting data, and creating and verifying digital signatures. Some hardware security modules (HSMs) are certified at various FIPS 140-2 Levels.

While it’s possible to deploy a credential management platform in a purely software-based system, this approach is inherently less secure. Token signing and encryption keys handled outside the cryptographic boundary of a certified HSM are significantly more vulnerable to attacks that could compromise the token signing and distribution process. HSMs are the only proven and auditable way to secure valuable cryptographic material and deliver FIPS-approved hardware protection.

HSMs enable your enterprise to:

- Secure token signing keys within carefully designed cryptographic boundaries, employing robust access control mechanisms with enforced separation of duties in order to ensure that keys are only used by authorized entities

- Ensure availability by using sophisticated key management, storage and redundancy features

- Deliver high performance to support increasingly demanding enterprise requirements for access to resources from different devices and locations

What is Key Management Interoperability Protocol (KMIP)?

According to OASIS (Organization for the Advancement of Structured Information Standards), “KMIP enables communication between key management systems and cryptographically-enabled applications, including email, databases, and storage devices.”

KMIP simplifies the way companies manage cryptographic keys, eliminating the need for redundant, incompatible key management processes. Key lifecycle management — including the generation, submission, retrieval, and deletion of cryptographic keys — is enabled by the standard. Designed for use by both legacy and new cryptographic applications, KMIP supports many kinds of cryptographic objects, including symmetric keys, asymmetric keys, digital certificates, and authentication tokens.

KMIP was developed by OASIS, which is a global nonprofit consortium that works on the development, convergence, and adoption of standards for security, Internet of Things, energy, content technologies, emergency management, and other areas.

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- The Importance of KMIP Standard for Centralized Key Management -- White Paper

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications by Securosis – White Paper

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is an Asymmetric Key or Asymmetric Key Cryptography?

Asymmetric keys are the foundation of Public Key Infrastructure (PKI), a cryptographic scheme requiring two different keys: one to lock or encrypt the plaintext, and one to unlock or decrypt the ciphertext. Neither key can perform both functions. One key is published (public key) and the other is kept private (private key). If the lock/encryption key is the one published, the system enables private communication from the public to the unlocking key's owner. If the unlock/decryption key is the one published, then the system serves as a signature verifier of documents locked by the owner of the private key. This system is also called asymmetric key cryptography.
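The two uses of a key pair described above can be shown with textbook RSA on deliberately tiny numbers. This is a teaching sketch only: the primes, the exponent, and the message value are illustrative assumptions, and real systems use keys of 2048 bits or more together with padding schemes.

```python
# Textbook RSA with tiny primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                    # modulus, shared by both keys
phi = (p - 1) * (q - 1)
e = 17                       # public exponent: (e, n) is the public key
d = pow(e, -1, phi)          # private exponent (modular inverse, Python 3.8+)

message = 65

# Public key published as the "lock": anyone can encrypt,
# only the private key holder can decrypt.
ciphertext = pow(message, e, n)
recovered = pow(ciphertext, d, n)

# Public key published as the "unlock": only the private key holder
# can sign, anyone can verify.
signature = pow(message, d, n)
verified = pow(signature, e, n) == message
```

The same pair of exponents serves both purposes; what changes is which operation is performed with the private key.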

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- The Evolution of Encryption – Blog Post

- What is Public Key Infrastructure (PKI)?

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications – White Paper by Securosis

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is a Symmetric Key?

In cryptography, a symmetric key is one that is used both to encrypt and decrypt information. This means that to decrypt information, one must have the same key that was used to encrypt it. The keys, in practice, represent a shared secret between two or more parties that can be used to maintain a private information link. This requirement that both parties have access to the secret key is one of the main drawbacks of symmetric key encryption, in comparison to public-key encryption.

Asymmetric encryption, on the other hand, uses a second, different key to decrypt information. (See “What is an Asymmetric Key or Asymmetric Key Cryptography?”)
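The defining property of a symmetric key, that the same secret both encrypts and decrypts, can be demonstrated with a toy stream cipher built from the standard library. This construction is an assumption made purely for illustration and must not be used to protect real data; production systems rely on vetted algorithms such as AES. The helper names `keystream` and `xor_crypt` are hypothetical.

```python
import hashlib
import secrets

def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Expand key and nonce into `length` pseudo-random bytes (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def xor_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR with the same keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, nonce, len(data))))

shared_key = secrets.token_bytes(32)   # the secret both parties must hold
nonce = secrets.token_bytes(12)        # not secret, but unique per message

ciphertext = xor_crypt(shared_key, nonce, b"wire transfer: 100 EUR")
plaintext = xor_crypt(shared_key, nonce, ciphertext)

# A party holding a different key recovers only noise.
garbage = xor_crypt(secrets.token_bytes(32), nonce, ciphertext)
```

Because one function and one key do both jobs, every party that needs to read the data must be given `shared_key`, which is the distribution drawback noted above.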

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- The Evolution of Encryption – Blog Post

- What is Public Key Infrastructure (PKI)?

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications – White Paper by Securosis

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is the Encryption Key Management Lifecycle?

The task of key management is the complete set of operations necessary to create, maintain, protect, and control the use of cryptographic keys. Keys have a life cycle; they’re created, live useful lives, and are retired. The typical encryption key lifecycle likely includes the following phases:

- Key generation

- Key registration

- Key storage

- Key distribution and installation

- Key use

- Key rotation

- Key backup

- Key recovery

- Key revocation

- Key suspension

- Key destruction
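One way to reason about the phases listed above is as a state machine in which only certain moves are legal. The sketch below uses a simplified set of five states and a transition table that are assumptions for illustration; they are not taken from any standard or product.

```python
from enum import Enum

class KeyState(Enum):
    GENERATED = "generated"
    ACTIVE = "active"
    SUSPENDED = "suspended"
    REVOKED = "revoked"
    DESTROYED = "destroyed"

# Hypothetical transition table: which states may follow each state.
ALLOWED = {
    KeyState.GENERATED: {KeyState.ACTIVE, KeyState.DESTROYED},
    KeyState.ACTIVE: {KeyState.SUSPENDED, KeyState.REVOKED},
    KeyState.SUSPENDED: {KeyState.ACTIVE, KeyState.REVOKED},
    KeyState.REVOKED: {KeyState.DESTROYED},
    KeyState.DESTROYED: set(),
}

def transition(current: KeyState, target: KeyState) -> KeyState:
    """Return the new state, or raise if the move is not permitted."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target

# A key is activated, suspended, reinstated, revoked, then destroyed.
state = KeyState.GENERATED
for step in (KeyState.ACTIVE, KeyState.SUSPENDED, KeyState.ACTIVE,
             KeyState.REVOKED, KeyState.DESTROYED):
    state = transition(state, step)

# A destroyed key can never be brought back into use.
try:
    transition(KeyState.DESTROYED, KeyState.ACTIVE)
    resurrected = True
except ValueError:
    resurrected = False
```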

Defining and enforcing encryption key management policies affects every stage of the key management life cycle. Each encryption key or group of keys needs to be governed by an individual key usage policy defining which device, group of devices, or types of application can request it, and what operations that device or application can perform — for example, encrypt, decrypt, or sign. In addition, encryption key management policy may dictate additional requirements for higher levels of authorization in the key management process to release a key after it has been requested or to recover the key in case of loss.
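A key usage policy of the kind described above can be pictured as a small record that is checked on every request. The class `KeyUsagePolicy`, its field names, and the example requesters are hypothetical and serve only to make the idea concrete.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class KeyUsagePolicy:
    allowed_requesters: frozenset      # devices or applications that may ask for the key
    allowed_operations: frozenset      # e.g. "encrypt", "decrypt", "sign"
    approvals_to_release: int = 0      # extra authorizations needed before release

    def permits(self, requester: str, operation: str, approvals: int = 0) -> bool:
        """Check a single request against the policy."""
        return (
            requester in self.allowed_requesters
            and operation in self.allowed_operations
            and approvals >= self.approvals_to_release
        )

backup_key_policy = KeyUsagePolicy(
    allowed_requesters=frozenset({"backup-server"}),
    allowed_operations=frozenset({"encrypt", "decrypt"}),
    approvals_to_release=2,
)

granted = backup_key_policy.permits("backup-server", "decrypt", approvals=2)
too_few_approvals = backup_key_policy.permits("backup-server", "decrypt", approvals=1)
wrong_operation = backup_key_policy.permits("backup-server", "sign", approvals=2)
unknown_device = backup_key_policy.permits("laptop-42", "decrypt", approvals=2)
```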

Related Articles

Secure your data, comply with regulatory and industry standards, and protect your organization’s reputation. Learn how Thales can help.

- Unified Key Management Solutions

- Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications by Securosis – White Paper

- Thales Key Management – White Paper

- Own and Manage your Encryption Keys – White Paper

- Best Practices for Cloud Data Protection and Key Management – White Paper

- Securing Microsoft Office365 and other Azure applications with CipherTrust Key Broker for Azure – White Paper

- The Importance of KMIP Standard for Centralized Key Management – White Paper

- Enterprise Key Management Solutions – Solution Brief

- CipherTrust Cloud Key Manager Introduction -- Video

What is a General Purpose Hardware Security Module (HSM)?

Hardware Security Modules (HSMs) are hardened, tamper-resistant hardware devices that strengthen encryption practices by generating keys, encrypting and decrypting data, and creating and verifying digital signatures. Some hardware security modules (HSMs) are certified at various FIPS 140-2 Levels. Hardware security modules (HSMs) are frequently used to:

- Meet and exceed established and emerging regulatory standards for cybersecurity

- Achieve higher levels of data security and trust

- Maintain high service levels and business agility

Find out how general purpose HSMs balance security, high performance and usability.

What is a Payment Hardware Security Module (HSM)?

A payment HSM is a hardened, tamper-resistant hardware device that is used primarily by the retail banking industry to provide high levels of protection for cryptographic keys and customer PINs used during the issuance of magnetic stripe and EMV chip cards (and their mobile application equivalents) and the subsequent processing of credit and debit card payment transactions. Payment HSMs normally provide native cryptographic support for all the major card scheme payment applications and undergo rigorous independent hardware certification under global schemes such as FIPS 140-2 and PCI HSM, as well as additional regional security requirements such as MEPS in France and APCA in Australia.

Some of their common use cases in the payments ecosystem include:

- PIN generation, management and validation

- PIN block translation during the network switching of ATM and POS transactions

- Card, user and cryptogram validation during payment transaction processing

- Payment credential issuing for payment cards and mobile applications

- Point-to-point encryption (P2PE) key management and secure data decryption

- Sharing keys securely with third parties to facilitate secure communications
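To give a sense of the data a payment HSM handles in the PIN use cases above, the sketch below builds a clear PIN block in ISO 9564 format 0, which combines the PIN with part of the card number (PAN). The function name is hypothetical and the PIN and PAN are made-up test values. In practice this block would be formed and encrypted inside secure hardware and never exposed in the clear; PIN block translation then means decrypting it under one key and re-encrypting it under another within the HSM.

```python
def pin_block_format0(pin: str, pan: str) -> str:
    """Build a clear ISO 9564 format 0 PIN block as 16 hex characters."""
    if not (pin.isdigit() and 4 <= len(pin) <= 12):
        raise ValueError("PIN must be 4 to 12 digits")
    if not (pan.isdigit() and len(pan) >= 13):
        raise ValueError("PAN must be at least 13 digits")
    # PIN field: control nibble 0, PIN length, PIN digits, padded with F.
    pin_field = f"0{len(pin):X}{pin}".ljust(16, "F")
    # PAN field: four zeros, then the rightmost 12 digits excluding the check digit.
    pan_field = "0000" + pan[:-1][-12:]
    # The PIN block is the XOR of the two 64-bit fields.
    return f"{int(pin_field, 16) ^ int(pan_field, 16):016X}"

block = pin_block_format0("1234", "43219876543210987")
```

Binding the PIN to the PAN in this way means the same PIN produces a different block for each card.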

What Is Remote HSM Management?

Remote hardware security module (HSM) management enables security teams to perform tasks linked to key and device management from a central remote location, avoiding the need to travel to the data center. A remote HSM management solution delivers operational cost savings in addition to making the task of managing HSMs more flexible and on-demand.

Depending on the HSM solution used, remote HSM management enables:

- Greater, more flexible control

- Strong access control based on digital credentials rather than physical keys

- Stronger audit controls from tracking activities to individual card credentials

- Quicker identification of remote HSM status issues

- Simpler software and license upgrade installation

- Reduced risk of errors

- Simplified logistics

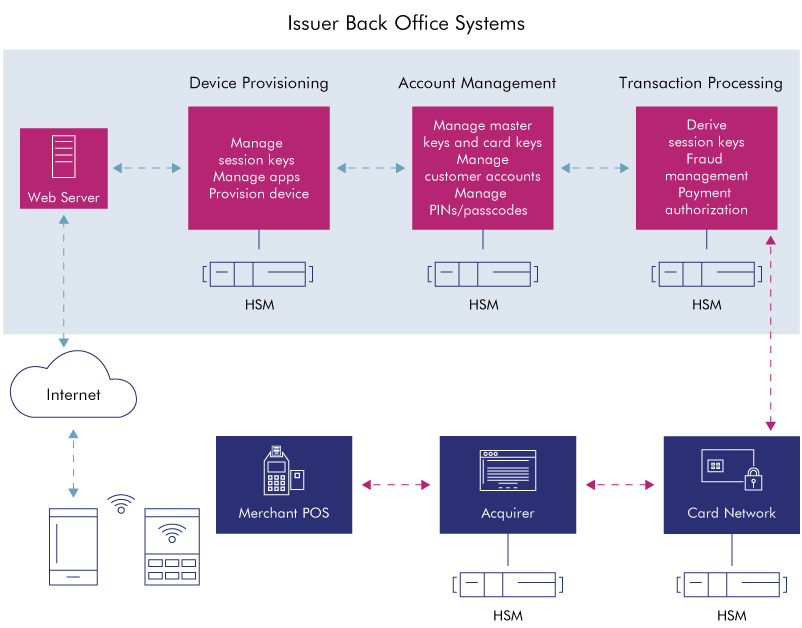

What is Host Card Emulation (HCE)?

Host card emulation (HCE) is a technology for securing a mobile phone so that it can be used to make credit or debit transactions at physical point-of-sale (POS) terminals. With HCE, critical payment credentials are stored in a secure shared repository (the issuer data center or private cloud) rather than on the phone. Limited-use credentials are delivered to the phone in advance to enable contactless transactions to take place.

This approach eliminates the need for Trusted Service Managers (TSMs) and shifts control back to the banks. However, it brings with it a different set of security and risk challenges.

- A centralized service to store many millions of payment credentials or create one-time use credentials on demand creates an obvious point of attack. Although banks have issued cards for years, those systems have largely been offline and have not required round-the-clock interaction with the payment token (in this case a plastic card). HCE requires these services to be online and accessible in real time as part of individual payment transactions. Failure to protect these service platforms places the issuer at considerable risk of fraud.

- Although the phone no longer stores payment credentials, it still plays three critical security roles, all of which create opportunities for theft or substitution of credentials or transaction information:

  - It provides the means for applications to request card data stored in the HCE service.

  - It is the method by which a user is authenticated and authorizes the service to provide the payments credentials.

  - It provides the communications channel over which payment credentials are passed to the POS terminal.

- All mobile payments schemes are more complex than traditional card payments, yet smart phone user expectations are extremely high:

  - Poor mobile network coverage can make HCE services inaccessible.

  - Complex authentication schemes lead to errors.

  - Software or hardware incompatibility can stop transactions.

What is Root of Trust?

Root of Trust (RoT) is a source that can always be trusted within a cryptographic system. Because cryptographic security is dependent on keys to encrypt and decrypt data and perform functions such as generating digital signatures and verifying signatures, RoT schemes generally include a hardened hardware module. A principal example is the hardware security module (HSM) which generates and protects keys and performs cryptographic functions within its secure environment.

Because this module is for all intents and purposes inaccessible outside the computer ecosystem, that ecosystem can trust the keys and other cryptographic information it receives from the root of trust module to be authentic and authorized. This is particularly important as the Internet of Things (IoT) proliferates, because to avoid being hacked, components of computing ecosystems need a way to determine that the information they receive is authentic. The RoT safeguards the security of data and applications and helps to build trust in the overall ecosystem.

RoT is a critical component of public key infrastructures (PKIs) to generate and protect root and certificate authority keys; code signing to ensure software remains secure, unaltered and authentic; and creating digital certificates for credentialing and authenticating proprietary electronic devices for IoT applications and other network deployments.

What is a Digital Certificate?

Digital certificates are the credentials that facilitate the verification of identities between users in a transaction. Much as a passport certifies one’s identity as a citizen of a country, the purpose of a digital certificate is to establish the identity of users within the ecosystem. Because digital certificates are used to identify the users to whom encrypted data is sent, or to verify the identity of the signer of information, protecting the authenticity and integrity of the certificate is imperative in order to maintain the trustworthiness of the system. In order to bind public keys with their associated user (owner of the private key), public key infrastructures (PKIs) use digital certificates.

What is a Certificate Authority?

A Certificate Authority (CA) is the core component of a public key infrastructure (PKI) responsible for establishing a hierarchical chain of trust. CAs issue the digital credentials used to certify the identity of users. CAs underpin the security of a PKI and the services it supports, and therefore can be the focus of sophisticated targeted attacks. To mitigate the risk of attacks against Certificate Authorities, physical and logical controls, as well as hardening mechanisms such as hardware security modules (HSMs), have become necessary to ensure the integrity of a PKI.

What is Code Signing?

In public key cryptography, code signing is a specific use of certificate-based digital signatures that enables an organization to verify the identity of the software publisher and certify the software has not been changed since it was published.

Digital signatures provide a proven cryptographic process for software publishers and in-house development teams to protect their end users from cybersecurity dangers, including advanced persistent threats (APTs), such as Duqu 2.0. Digital signatures ensure software integrity and authenticity. Digital signatures enable end users to verify publisher identities while simultaneously validating that the installation package has not been changed since it was signed. All modern operating systems look for and validate digital signatures during installation, and warnings about unsigned code can cause end users to abandon installation.
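The check that an installation package "has not been changed since it was signed" follows a hash-then-sign pattern, sketched below with a toy RSA key. The key is built from two small known primes and the hash is simply reduced into the modulus, both of which are simplifying assumptions for illustration; real code signing uses much larger keys, standardized padding, and a certificate that ties the public key to the publisher. The function names and package contents are hypothetical.

```python
import hashlib

# Toy RSA key from two known Mersenne primes: far too small for real use.
p, q = 2**61 - 1, 2**89 - 1
n = p * q
e = 65537                            # public exponent
d = pow(e, -1, (p - 1) * (q - 1))    # private exponent (Python 3.8+)

def digest_as_int(package: bytes) -> int:
    """Hash the package and reduce the digest into the toy modulus."""
    return int.from_bytes(hashlib.sha256(package).digest(), "big") % n

def sign(package: bytes) -> int:
    """Publisher side: sign the digest with the private exponent."""
    return pow(digest_as_int(package), d, n)

def verify(package: bytes, signature: int) -> bool:
    """User side: recompute the digest and compare using the public key."""
    return pow(signature, e, n) == digest_as_int(package)

release = b"installer-v1.0 contents"
signature = sign(release)

ok = verify(release, signature)
tampered_ok = verify(release + b" plus malware", signature)
```

Any change to the package changes its digest, so the original signature no longer verifies.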

What is a Digital Signature?

Digital signatures provide a proven cryptographic process for software publishers and in-house development teams to protect their end users from cybersecurity dangers, including advanced persistent threats (APTs), such as Duqu 2.0. Digital signatures ensure the integrity and authenticity of software and documents by enabling end users to verify publisher identities while validating that the code or document has not been changed since it was signed.

Digital signatures go beyond electronic versions of traditional signatures by invoking cryptographic techniques to dramatically increase security and transparency, both of which are critical to establishing trust and legal validity. As an application of public key cryptography, digital signatures can be applied in many different settings, from a citizen filing an online tax return, to a procurement officer executing a contract with a vendor, to an electronic invoice, to a software developer publishing updated code.

What is Time Stamping?

Time stamping is an increasingly valuable complement to digital signing practices, enabling organizations to record when a digital item—such as a message, document, transaction or piece of software—was signed. For some applications, the timing of a digital signature is critical, as in the case of stock trades, lottery ticket issuance and some legal proceedings. Even when time is not intrinsic to the application, time stamping is helpful for record keeping and audit processes, because it provides a mechanism to prove whether the digital certificate was valid at the time it was used. The growing importance of digital signing solutions has created a corresponding demand for time stamping, so many software programs, such as Microsoft Office, support time stamping capabilities.

The Importance of Security

If time stamping is to add real value, the time stamp must be secure.

Risks Associated with Insecure Time Stamping

- The inability to trust electronic processes can result in costly paper trails to back up electronic records.

- By manipulating a computer clock, an attacker can easily compromise a software-based time stamping process—thereby invalidating the overall signing process.

- Insecure time stamping or digital signing processes can expose organizations to compliance problems and legal challenges.

- Even after private signing keys and certificates have been revoked, users can still have access to them. Without time stamping, organizations cannot prove whether signatures were created before or after a certificate was revoked.
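The last risk above comes down to a simple comparison once a trusted time stamp exists. The following sketch assumes the signing time comes from a secure time stamp rather than the signer's own clock; the function name, parameters, and dates are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional

def signature_trustworthy(
    signed_at: datetime,
    not_before: datetime,
    not_after: datetime,
    revoked_at: Optional[datetime] = None,
) -> bool:
    """Accept a signature only if the certificate was valid and unrevoked when it was made."""
    if not (not_before <= signed_at <= not_after):
        return False
    return revoked_at is None or signed_at < revoked_at

utc = timezone.utc
not_before = datetime(2023, 1, 1, tzinfo=utc)
not_after = datetime(2025, 1, 1, tzinfo=utc)
revoked_at = datetime(2024, 6, 1, tzinfo=utc)

# Signed three months before revocation: still provably valid.
signed_before = signature_trustworthy(
    datetime(2024, 3, 1, tzinfo=utc), not_before, not_after, revoked_at
)
# Signed after revocation: rejected.
signed_after = signature_trustworthy(
    datetime(2024, 9, 1, tzinfo=utc), not_before, not_after, revoked_at
)
```

Without a trustworthy value for `signed_at`, neither case can be distinguished, which is why the time stamp itself must be secured.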

What is PKI?

Public key infrastructure (PKI) is the set of hardware, software, policies, processes, and procedures required to create, manage, distribute, use, store, and revoke digital certificates and public keys. PKIs are the foundation that enables the use of technologies such as digital signatures and encryption across large user populations. PKIs deliver the elements essential for a secure and trusted business environment for e-commerce and the growing Internet of Things (IoT).

PKIs help establish the identity of people, devices, and services – enabling controlled access to systems and resources, protection of data, and accountability in transactions. Next generation business applications are becoming more reliant on PKI technology to guarantee high assurance, because evolving business models are becoming more dependent on electronic interaction requiring online authentication and compliance with stricter data security regulations.

The Role of Certificate Authorities (CAs)

In order to bind public keys with their associated user (owner of the private key), PKIs use digital certificates. Digital certificates are the credentials that facilitate the verification of identities between users in a transaction. Much as a passport certifies one’s identity as a citizen of a country, the digital certificate establishes the identity of users within the ecosystem. Because digital certificates are used to identify the users to whom encrypted data is sent, or to verify the identity of the signer of information, protecting the authenticity and integrity of the certificate is imperative to maintain the trustworthiness of the system.

Certificate authorities (CAs) issue the digital credentials used to certify the identity of users. CAs underpin the security of a PKI and the services it supports, and can therefore be the focus of sophisticated targeted attacks. To mitigate the risk of attacks against CAs, physical and logical controls, as well as hardening mechanisms such as hardware security modules (HSMs), have become necessary to ensure the integrity of a PKI.

PKI Deployment

PKIs provide a framework that enables cryptographic data security technologies such as digital certificates and signatures to be effectively deployed on a mass scale. PKIs support identity management services within and across networks and underpin online authentication inherent in secure socket layer (SSL) and transport layer security (TLS) for protecting internet traffic, as well as document and transaction signing, application code signing, and time-stamping. PKIs support solutions for desktop login, citizen identification, mass transit, mobile banking, and are critically important for device credentialing in the IoT. Device credentialing is becoming increasingly important to impart identities to growing numbers of cloud-based and internet-connected devices that run the gamut from smart phones to medical equipment.

Cryptographic Security

Using the principles of asymmetric and symmetric cryptography, PKIs facilitate the establishment of a secure exchange of data between users and devices – ensuring authenticity, confidentiality, and integrity of transactions. Users (also known as “Subscribers” in PKI parlance) can be individual end users, web servers, embedded systems, connected devices, or programs/applications that are executing business processes. Asymmetric cryptography provides the users, devices or services within an ecosystem with a key pair composed of a public and a private key component. A public key is available to anyone in the group for encryption or for verification of a digital signature. The private key on the other hand, must be kept secret and is only used by the entity to which it belongs, typically for tasks such as decryption or for the creation of digital signatures.
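The division of labor between the private and public key can be shown with a toy signature sketch. This is textbook RSA with deliberately tiny, hard-coded primes and no padding, chosen only so the arithmetic is visible; it is an illustrative assumption, not how a PKI or any production library implements signing, and it offers no real security.

```python
import hashlib

# Toy parameters (assumption: illustrative only, far too small for real use).
P, Q = 61, 53
N = P * Q                           # public modulus, shared with everyone
E = 17                              # public exponent, shared with everyone
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent, kept secret (Python 3.8+)


def digest(message: bytes) -> int:
    """Hash the message and reduce it into the toy modulus range."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Only the holder of the private exponent D can produce this value."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public pair (N, E) can check the signature."""
    return pow(signature, E, N) == digest(message)


message = b"transfer approved"
signature = sign(message)
accepted = verify(message, signature)
```

Real deployments use key sizes of thousands of bits, padding schemes, and vetted cryptographic libraries, typically with the private key held in an HSM.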

The Increasing Importance of PKIs

With evolving business models becoming more dependent on electronic transactions and digital documents, and with more Internet-aware devices connected to corporate networks, the role of a PKI is no longer limited to isolated systems such as secure email, smart cards for physical access or encrypted web traffic. PKIs today are expected to support larger numbers of applications, users and devices across complex ecosystems. And with stricter government and industry data security regulations, mainstream operating systems and business applications are becoming more reliant than ever on an organizational PKI to guarantee trust.

Learn more about how PKIs secure digital applications and validate everything from transactions and identities to supply chains.

What is certification authority or root private key theft?

The theft of certification authority (CA) or root private keys enables an attacker to take over an organization’s public key infrastructure (PKI) and issue bogus certificates, as was done in the Stuxnet attack. Any such compromise may force revocation and reissuance of some or all of the previously issued certificates. A root compromise, such as a stolen root private key, destroys the trust of your PKI and can easily force you to establish a new root and subsidiary issuing CA infrastructure. This can be very expensive, and it can also damage an enterprise’s corporate identity.

The integrity of an organization’s private keys, throughout the infrastructure from root to issuing CAs, provides the core trust foundation of its PKI and, as such, must be safeguarded. The recognized best practice for securing these critical keys is to use a FIPS 140-2 Level 3 certified hardware security module (HSM), a tamper-resistant device that meets the highest security and assurance standards.

What is inadequate separation (segregation) of duties for PKIs?

Weak controls over the use of signing keys can enable the certification authority (CA) to be misused, even if the keys themselves are not compromised. A malicious actor might issue fraudulent certificates that allow a device or user to impersonate a legitimate user and conduct a man-in-the-middle attack, or to digitally sign malware that then propagates because it appears to come from a trusted source.

Proper security controls need to be established when designing an organization’s public key infrastructure (PKI). This includes separating CA roles and setting policies so that the operation fails if an individual attempts to perform more than one CA role. Setting up policies and procedures to ensure proper separation of duties, including establishing contingencies when a team member leaves, is critical to the security and integrity of the PKI and must be part of the initial design. It is preferable to implement a technology that enables a technical solution to the separation of duties policy. For example, presentation of an “M of N” smart card set can enforce a robust separation of duties policy by simply not allowing an individual to issue certificates without the presence of, for example, a Security Officer.
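The “M of N” idea can be sketched in a few lines. This is a simplified model under stated assumptions: card holders are represented by plain names, and the functions `quorum_met` and `issue_certificate` are hypothetical, not the interface of any HSM or CA product. The sketch also assumes the operator requesting issuance may not count toward the quorum.

```python
from typing import Set


def quorum_met(presented: Set[str], custodians: Set[str], m: int) -> bool:
    """True if at least m of the registered custodians presented a card."""
    return len(presented & custodians) >= m


def issue_certificate(subject: str, operator: str, presented: Set[str],
                      custodians: Set[str], m: int) -> str:
    """Refuse to issue unless m custodians other than the operator approve."""
    independent = presented - {operator}  # operator cannot self-approve
    if not quorum_met(independent, custodians, m):
        raise PermissionError("M of N quorum not met; issuance refused")
    return f"certificate issued for {subject}"


custodians = {"alice", "bob", "carol"}  # N = 3 registered card holders
result = issue_certificate("web01.example.com", "dave", {"bob", "carol"}, custodians, m=2)
```

Because the check fails unless enough independent custodians are present, no single individual can issue a certificate alone, which is the technical enforcement the policy calls for.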

What is insufficient scalability in a PKI?

A public key infrastructure (PKI) that fails to factor in the growth of the organization and its users will eventually need to be redesigned as the business scales, meaning lost productivity and customer impact. With new applications coming online daily and many users demanding access via multiple devices, good business planning requires that PKI scalability be considered from the outset.

Many organizations will need more than one certification authority (CA) to meet their growing requirements — certificates are used for logon authentication, digital document signing, email, and more. A root CA can act as the “master” with multiple subordinate CAs covering the various use cases. Alternatively, the organization can plan for scale by establishing multiple root CAs and multiple hierarchies. Regardless of the strategy, the goal is to get it right the first time to ensure an organization’s PKI can keep up with its growing needs.

What is subversion of online certificate validation?

Subversion of online certificate validation processes can enable malicious use of revoked certificates. An attacker who can prevent a certificate from reaching the certificate revocation list can impersonate a legitimate actor and execute malicious activity, while the victim is unaware of communicating with an illegitimate participant.

Defining certificate authentication policies and procedures is an instrumental part of a public key infrastructure’s (PKI) design. Further, proper execution and enforcement will ensure that revoked certificates — and users — are denied access. While many organizations will use a certificate revocation list (CRL), some might opt for a different approach, such as online certificate status protocol (OCSP) or authentication, authorization and accounting (AAA).

Such decisions need to be part of the initial design discussions based on the needs of the organization. It is worth noting that any private keys deployed in the certificate revocation process need to be protected equally with the keys that form the basis of the issuing process.
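A minimal sketch of a revocation check follows, assuming a CRL-style list that has already been downloaded and whose signature has already been verified. The `RevocationList` type and `is_certificate_acceptable` function are hypothetical. The one design choice shown is failing closed: if the list is past its next-update time, the certificate is rejected rather than trusted, since a stale list is one way validation can be subverted.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import FrozenSet


@dataclass(frozen=True)
class RevocationList:
    """Hypothetical, already-verified snapshot of revoked serial numbers."""
    revoked_serials: FrozenSet[int]
    next_update: datetime  # after this time the list is considered stale


def is_certificate_acceptable(serial: int, crl: RevocationList, now: datetime) -> bool:
    """Reject revoked certificates, and reject everything if the list is stale."""
    if now > crl.next_update:
        return False  # fail closed: no fresh revocation data
    return serial not in crl.revoked_serials


utc = timezone.utc
crl = RevocationList(frozenset({1001, 1002}), next_update=datetime(2024, 1, 8, tzinfo=utc))
ok = is_certificate_acceptable(2000, crl, datetime(2024, 1, 5, tzinfo=utc))
revoked = is_certificate_acceptable(1001, crl, datetime(2024, 1, 5, tzinfo=utc))
stale = is_certificate_acceptable(2000, crl, datetime(2024, 1, 9, tzinfo=utc))
```

Whether to fail closed or fail open on missing revocation data is a policy decision with availability consequences; the sketch only shows the stricter option.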

What is lack of trust and non-repudiation in a PKI?

A public key infrastructure (PKI) with inadequate security, especially in key management, exposes the organization to loss or disruption if it cannot legally verify that a message was sent by a specific user.

A PKI built with security and integrity at its core can provide you with legal protection when user activity is in dispute. The secure digital signature provides irrefutable evidence of the message’s sender as well as the time it was sent, but it is only as defensible as the PKI is strong. By demonstrating that signing keys are adequately protected all the way back to the root key, your organization can withstand any legal challenge about the authenticity of a specific user and their actions.

What is GDPR (General Data Protection Regulation)?

Perhaps the most comprehensive data privacy standard to date, the GDPR presents a significant challenge for organizations that process the personal data of EU citizens – regardless of where the organization is headquartered.

Effective as of May 2018, the EU’s General Data Protection Regulation (GDPR) is designed to improve personal data protections and increase organizational accountability for data breaches. With potential fines of up to four percent of global revenues or 20 million EUR (whichever is higher), the GDPR certainly has teeth. No matter where your organization is located, if it processes or controls the personal data of EU residents, it must be in compliance with GDPR, or it will be liable to significant fines and the requirement to inform affected parties of data breaches.
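The “whichever is higher” ceiling described above is simple arithmetic, sketched here with whole-euro integers. The function name `max_gdpr_fine_eur` is hypothetical, and the result is only the upper bound stated in the text, not a prediction of any actual fine.

```python
FLAT_CAP_EUR = 20_000_000      # 20 million EUR
REVENUE_SHARE_PERCENT = 4      # four percent of global revenues


def max_gdpr_fine_eur(global_annual_revenue_eur: int) -> int:
    """Upper bound on a fine: the higher of the flat cap and 4% of revenue."""
    revenue_based = global_annual_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return max(FLAT_CAP_EUR, revenue_based)


large_company_cap = max_gdpr_fine_eur(1_000_000_000)  # 4% is 40 million EUR
small_company_cap = max_gdpr_fine_eur(100_000_000)    # 4% is 4 million EUR
```

For the larger company the revenue-based figure applies (40 million EUR); for the smaller one the flat 20 million EUR figure is the higher of the two.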

GDPR is expansive and includes the following chapters, sections, and articles:

Chapter 1: General Provisions

- Article 1: Subject matter and objectives

- Article 2: Material scope

- Article 3: Territorial scope

- Article 4: Definitions

Chapter 2: Principles

- Article 5: Principles relating to personal data processing

- Article 6: Lawfulness of processing

- Article 7: Conditions for consent

- Article 8: Conditions applicable to child's consent in relation to information society services

- Article 9: Processing of special categories of personal data

- Article 10: Processing of data relating to criminal convictions and offences

- Article 11: Processing which does not require identification

Chapter 3: Rights of the Data Subject

- Section 1: Transparency and Modalities

- Article 12: Transparent information, communication and modalities for the exercise of the rights of the data subject

- Section 2: Information and Access to Data

- Article 13: Information to be provided where personal data are collected from the data subject

- Article 14: Information to be provided where personal data have not been obtained from the data subject

- Article 15: Right of access by the data subject

- Section 3: Rectification and Erasure

- Article 16: Right to rectification

- Article 17: Right to erasure ('right to be forgotten')

- Article 18: Right to restriction of processing

- Article 19: Notification obligation regarding rectification or erasure of personal data or restriction of processing

- Article 20: Right to data portability

- Section 4: Right to object and automated individual decision making

- Article 21: Right to object

- Article 22: Automated individual decision-making, including profiling

- Section 5: Restrictions

- Article 23: Restrictions

Chapter 4: Controller and Processor

- Section 1: General Obligations

- Article 24: Responsibility of the controller

- Article 25: Data protection by design and by default

- Article 26: Joint controllers

- Article 27: Representatives of controllers or processors not established in the Union

- Article 28: Processor

- Article 29: Processing under the authority of the controller or processor

- Article 30: Records of processing activities

- Article 31: Cooperation with the supervisory authority

- Section 2: Security of personal data

- Article 32: Security of processing

- Article 33: Notification of a personal data breach to the supervisory authority

- Article 34: Communication of a personal data breach to the data subject

- Section 3: Data protection impact assessment and prior consultation

- Article 35: Data protection impact assessment

- Article 36: Prior Consultation

- Section 4: Data protection officer

- Article 37: Designation of the data protection officer

- Article 38: Position of the data protection officer

- Article 39: Tasks of the data protection officer

- Section 5: Codes of conduct and certification

- Article 40: Codes of Conduct

- Article 41: Monitoring of approved codes of conduct

- Article 42: Certification

- Article 43: Certification Bodies

Chapter 5: Transfer of personal data to third countries or international organizations

- Article 44: General Principle for transfer

- Article 45: Transfers on the basis of an adequacy decision

- Article 46: Transfers subject to appropriate safeguards

- Article 47: Binding corporate rules

- Article 48: Transfers or disclosures not authorised by Union law

- Article 49: Derogations for specific situations

- Article 50: International cooperation for the protection of personal data

Chapter 6: Independent Supervisory Authorities

- Section 1: Independent status

- Article 51: Supervisory Authority

- Article 52: Independence

- Article 53: General conditions for the members of the supervisory authority

- Article 54: Rules on the establishment of the supervisory Authority

- Section 2: Competence, Tasks, and Powers

- Article 55: Competence

- Article 56: Competence of the lead supervisory authority

- Article 57: Tasks

- Article 58: Powers

- Article 59: Activity Reports

Chapter 7: Co-operation and Consistency

- Section 1: Co-operation

- Article 60: Cooperation between the lead supervisory authority and the other supervisory authorities concerned

- Article 61: Mutual Assistance

- Article 62: Joint operations of supervisory authorities

- Section 2: Consistency

- Article 63: Consistency mechanism

- Article 64: Opinion of the Board

- Article 65: Dispute resolution by the Board

- Article 66: Urgency Procedure

- Article 67: Exchange of information

- Section 3: European Data Protection Board

- Article 68: European Data Protection Board

- Article 69: Independence

- Article 70: Tasks of the Board

- Article 71: Reports

- Article 72: Procedure

- Article 73: Chair

- Article 74: Tasks of the Chair

- Article 75: Secretariat

- Article 76: Confidentiality

Chapter 8: Remedies, Liability, and Sanctions

- Article 77: Right to lodge a complaint with a supervisory authority

- Article 78: Right to an effective judicial remedy against a supervisory authority

- Article 79: Right to an effective judicial remedy against a controller or processor

- Article 80: Representation of data subjects

- Article 81: Suspension of proceedings

- Article 82: Right to compensation and liability

- Article 83: General conditions for imposing administrative fines

- Article 84: Penalties

Chapter 9: Provisions relating to specific data processing situations

- Article 85: Processing and freedom of expression and information

- Article 86: Processing and public access to official documents

- Article 87: Processing of the national identification number

- Article 88: Processing in the context of employment

- Article 89: Safeguards and derogations relating to processing for archiving purposes in the public interest, scientific or historical research purposes or statistical purposes

- Article 90: Obligations of secrecy

- Article 91: Existing data protection rules of churches and religious associations

Chapter 10: Delegated Acts and Implementing Acts

- Article 92: Exercise of the delegation

- Article 93: Committee procedure

Chapter 11: Final provisions

- Article 94: Repeal of Directive 95/46/EC

- Article 95: Relationship with Directive 2002/58/EC

- Article 96: Relationship with previously concluded Agreements

- Article 97: Commission Reports

- Article 98: Review of other Union legal acts on data protection

- Article 99: Entry into force and application

Key Provisions of Article 32

Some of the key measures called for by GDPR Article 32 include:

- the pseudonymisation and encryption of personal data;

- the ability to ensure the ongoing confidentiality, integrity, availability and resilience of processing systems and services;

- the ability to restore the availability and access to personal data in a timely manner in the event of a physical or technical incident;

- a process for regularly testing, assessing and evaluating the effectiveness of technical and organisational measures for ensuring the security of the processing.

Key Provisions of Article 34

Article 34 of the regulation details what an organization must do to avoid having to notify subjects in case of a breach.

- When the personal data breach is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall communicate the personal data breach to the data subject without undue delay.

- The communication to the data subject referred to in paragraph 1 of this Article shall describe in clear and plain language the nature of the personal data breach ….

- The communication to the data subject referred to in paragraph 1 shall not be required if any of the following conditions are met:

  - the controller has implemented appropriate technical and organisational protection measures, and those measures were applied to the personal data affected by the personal data breach, in particular those that render the personal data unintelligible to any person who is not authorised to access it, such as encryption;

  - the controller has taken subsequent measures which ensure that the high risk to the rights and freedoms of data subjects referred to in paragraph 1 is no longer likely to materialise;

  - it would involve disproportionate effort. In such a case, there shall instead be a public communication or similar measure whereby the data subjects are informed in an equally effective manner.

Related Articles

- General Data Protection Regulation (GDPR) Compliance

- Research and Whitepapers: Addressing Key Provisions of the General Data Protection Regulation (GDPR)

- eBooks: GDPR Compliance in Multi-cloud Environments

- Research and Whitepapers: Securosis: Cracking the Confusion: Encryption and Tokenization for Data Centers, Servers and Applications by Securosis

What Is Pseudonymisation?

Pseudonymisation is generally associated with the EU’s General Data Protection Regulation (GDPR), which calls for pseudonymisation to protect personally identifiable information (PII). According to “Article 4, Definitions” of the Agreed Upon Text of the GDPR:

'Pseudonymisation' means the processing of personal data in such a manner that the personal data can no longer be attributed to a specific data subject without the use of additional information, provided that such additional information is kept separately and is subject to technical and organisational measures to ensure that the personal data are not attributed to an identified or identifiable natural person.

Earlier the same document defines “personal data” and “data subject”:

'Personal data' means any information relating to an identified or identifiable natural person ('data subject'); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.
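One common way to approach this definition is keyed hashing, sketched below with Python's standard `hmac` module. The choice of HMAC-SHA256 is an assumption for illustration; the GDPR does not prescribe a technique. The secret key plays the role of the “additional information” and would have to be stored separately from the pseudonymised records. The function name `pseudonymise` and the sample values are hypothetical.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    Without secret_key, the output cannot practically be linked back to
    the identifier, so the key must be kept apart from the data set.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"example-key-kept-in-a-separate-system"  # placeholder value only
record = {"customer": pseudonymise("jane.doe@example.com", key), "basket_total": 42}
same_person_again = pseudonymise("jane.doe@example.com", key)
```

Because the same identifier and key always give the same pseudonym, records can still be joined for analysis, while re-identification requires access to the separately held key.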

Why Does PCI DSS Matter?

PCI DSS stands for Payment Card Industry Data Security Standard. Protecting payment-related data is certainly important, but similar concerns about a much wider range of sensitive personal information (such as medical records, criminal backgrounds, and employment information) have elevated the issue of data protection, triggering numerous privacy laws and data-breach-disclosure obligations.

Compliance, of course, is mandatory. Failure to take the appropriate steps would at the very least damage your organization’s reputation and put the enterprise at a competitive disadvantage. Worse, if you experienced a data breach, you’d be hit by fines and accusations of negligence would come thick and fast. Those fines might be levied by the card brands themselves and/or your acquirer (the organization that processes transactions on your behalf and that might be responsible for vouching for your PCI DSS compliance to the payment card brands). You’d also face increased transaction fees and potential litigation.

Avoiding all this trouble makes it easy to see why complying with the PCI DSS is in your organization’s best interest. There’s another benefit: You can use many of the same technologies and processes you use to achieve PCI DSS compliance to protect a wide variety of data across your enterprise.

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

Why Should My Organization Maintain a Universal Data Security Standard, If It Is Subject to PCI DSS?

Account data can easily find its way into a wide variety of business systems, ranging from transaction processing to customer relationship management and added-value systems, such as loyalty and customer support. The challenge is that all these environments need to be protected to achieve compliance with the PCI DSS. As a result, this standard has a breadth and depth that far exceed those of other privacy and data security mandates. In fact, security experts tend to agree that it also well represents and aligns with industry best practices. Although some aspects of the standard may be new to your organization, it likely addresses areas of genuine risk.

The standard was designed to be applied consistently by all companies around the world, from one-man bands to huge multinational corporations. In practice, however, assessments also have to take legal, regulatory, and business requirements into account.

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

What Are the Core Requirements of PCI DSS?

The PCI DSS consists of 12 published requirements, which in turn contain multiple sub-requirements. The 12 PCI DSS compliance requirements are organized in six groups as shown in the table below:

PCI DSS Compliance Requirements

| Group | Requirements |
| --- | --- |
| Build and Maintain a Secure Network | Requirement 1: Install and maintain a firewall configuration to protect cardholder data. |
| | Requirement 2: Do not use vendor-supplied defaults for system passwords and other security parameters. |
| Protect Cardholder Data | Requirement 3: Protect stored cardholder data. |
| | Requirement 4: Encrypt transmission of cardholder data across open, public networks. |
| Maintain a Vulnerability Management Program | Requirement 5: Protect all systems against malware and regularly update antivirus software or programs. |
| | Requirement 6: Develop and maintain secure systems and applications. |
| Implement Strong Access Control Measures | Requirement 7: Restrict access to cardholder data by business need to know. |
| | Requirement 8: Identify and authenticate access to system components. |
| | Requirement 9: Restrict physical access to cardholder data. |
| Regularly Monitor and Test Networks | Requirement 10: Track and monitor all access to network resources and cardholder data. |
| | Requirement 11: Regularly test security systems and processes. |
| Maintain an Information Security Policy | Requirement 12: Maintain a policy that addresses information security for all personnel. |

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

Can I Use PCI DSS Principles to Protect Other Data?

To become PCI DSS compliant, you’re going to be investing a lot of time and money in building a secure infrastructure and supporting processes to meet PCI DSS security requirements. The PCI DSS is primarily concerned with the protection of cardholder data. What about all the other data that your company handles that has nothing to do with payments? Some of it may benefit from similar levels of protection.

By thinking beyond what you’re doing to meet PCI DSS requirements, you can leverage those security principles to build additional solutions that support your organization’s critical assets. You could do any of the following:

- Encrypt all the network traffic inside your organization to ensure that only those who need to see the data can do so.

- Protect all data at rest across your whole enterprise by using encryption and/or tokenization and ensuring that only those who are authorized to decrypt that data have access to it.

- Protect all sensitive data at the point of capture (the point at which it enters your organization) by encrypting selected fields in the data record.

- Keep security under your full control by encrypting data and managing the keys locally before sending data to any cloud service provider you use.

- Implement a layered security approach so that your infrastructure doesn’t have a single vulnerable point of attack, which makes it much more difficult for an attacker (inside or outside your organization) to gain unauthorized access to your data.

If you adopt a security-conscious approach to all data and to data access within your organization, meeting the specific PCI DSS requirements is much simpler.

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

How Can I Protect Stored Payment Cardholder Data (PCI DSS Requirement 3)?

At the heart of the PCI DSS is the need to protect any cardholder data that you store. The standard provides examples of suitable cardholder data protection methods, such as encryption, tokenization, truncation, masking, and hashing. By using one or more of these protection methods, you can effectively make stolen data unusable.

Protecting stored data isn’t a “one size fits all” concept. You should think of PCI DSS Requirement 3 as being the minimum level of security that you should implement to make life as difficult as possible for potential attackers.

Knowing the data storage rules

You need to know all locations where data is stored (a big incentive to minimize your data footprint). Requirement 3 also provides guidance about which data can — and can’t — be stored. One of the best pieces of advice in this requirement is “If you don’t need it, don’t store it.”

Making stored data unreadable

The PCI DSS requires you to render a primary account number (PAN) unreadable anywhere it’s stored, including portable storage media, backup devices, and even audit logs (which are often overlooked). The deliberate use of the word unreadable by the PCI Security Standards Council allows the council to avoid mandating any particular technology, which in turn future-proofs the requirements. Even so, Requirement 3.4 provides several options:

- One-way hashes based on strong cryptography in which the entire PAN must be hashed

- Truncation, which stores a segment of the PAN (not to exceed the first six and last four digits)

- Tokenization, which stores a substitute or proxy for the PAN rather than the PAN itself

- Strong cryptography underpinned by key management processes and security procedures
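Two of the options above, truncation and tokenization, can be sketched as follows. This is a simplified illustration under stated assumptions: PANs are treated as 13 to 19 digit strings, `truncate_pan` and `TokenVault` are hypothetical names, and the in-memory dictionary stands in for what would in practice be a hardened, access-controlled token vault.

```python
import secrets
from typing import Dict


def truncate_pan(pan: str) -> str:
    """Keep at most the first six and last four digits; discard the rest."""
    digits = "".join(ch for ch in pan if ch.isdigit())
    if not 13 <= len(digits) <= 19:  # assumed PAN length range
        raise ValueError("unexpected PAN length")
    return digits[:6] + digits[-4:]


class TokenVault:
    """Toy vault mapping random tokens to PANs (in-memory, illustration only)."""

    def __init__(self) -> None:
        self._pan_by_token: Dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        token = secrets.token_hex(16)  # random, not derived from the PAN
        self._pan_by_token[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        return self._pan_by_token[token]


test_pan = "4111 1111 1111 1111"  # widely used test number, not a real card
truncated = truncate_pan(test_pan)
vault = TokenVault()
token = vault.tokenize(test_pan)
```

Truncation is irreversible, so the stored segment cannot be turned back into the PAN, whereas a token can be exchanged for the PAN only by systems allowed to query the vault.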

Managing keys securely

Whatever approach you intend to use to render your stored data unreadable, you need to secure the associated cryptographic keys. Strong encryption is useless if it’s coupled with a weak key management process. The standard provides detailed guidance on managing keys, and that guidance closely resembles the way banks and other financial institutions are required to secure their cryptographic keys. Additional requirements call on you to fully document the way you implement and manage various keys throughout their life cycles.

Your success in managing keys depends on having good cryptographic key custodians: people you trust who won’t collude to attack your systems. These people are required to formally acknowledge that they understand and accept their key-custodian responsibilities.

Also, you must ensure that security policies and operational procedures for protecting stored cardholder data are documented, used, and known to all affected parties within your organization.

Don’t underestimate the critical importance of strong key management, and don’t try to take shortcuts. Your Qualified Security Assessor will find your errors, and attackers may find them too.

Masking the PAN before displaying

The standard provides some very specific advice regarding the display of a PAN: Display the full range of digits (normally, 16) only to those personnel who must view it for business reasons. In all other cases, you must implement masking to ensure that no more than the first six digits and the last four digits of the PAN are displayed.
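The display rule can be sketched as a small helper. The function name `mask_pan`, the `authorized` flag, and the assumed 13 to 19 digit length range are illustrative choices; how a real system decides who has a business need to view the full PAN is outside this sketch.

```python
def mask_pan(pan: str, authorized: bool = False) -> str:
    """Show the full PAN only to authorized viewers; otherwise show
    no more than the first six and last four digits."""
    digits = "".join(ch for ch in pan if ch.isdigit())
    if not 13 <= len(digits) <= 19:  # assumed PAN length range
        raise ValueError("unexpected PAN length")
    if authorized:
        return digits
    hidden = len(digits) - 10
    return digits[:6] + "*" * hidden + digits[-4:]


masked = mask_pan("4111 1111 1111 1111")
full = mask_pan("4111 1111 1111 1111", authorized=True)
```

Here `masked` is `411111******1111`. Defaulting `authorized` to `False` means a caller must opt in explicitly to see the full number.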

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier. Please refer to it for more detail on these topics.

How Can I Encrypt Account Data in Transit (PCI DSS Requirement 4)?

Sensitive data is quite vulnerable when it’s transmitted over open networks, including the Internet, public or otherwise untrusted wireless networks, and cellular networks. The PCI Security Standards Council takes a very hard line on data in transit, requiring the use of trusted keys/certificates, secure transport protocols, and strong encryption. The council also assigns you the ongoing task of reviewing your security protocols to ensure that they conform to industry best practices for secure communications.

Blocking eavesdroppers

Many potential attackers are eavesdroppers who are trying to exploit known security weaknesses. The PCI DSS includes specific requirements and guidance on establishing connections to other systems:

- Proceed only when you have trusted keys/certificates in place. You’re expected to validate these keys and/or certificates and to make sure that they haven’t expired.

- Configure your systems to use only secure protocols, and don’t accept connection requests from systems using weaker protocols or inadequate encryption key lengths.

- Implement strong encryption for authentication and transmission over wireless networks that transmit cardholder data or that are connected to the cardholder data environment.
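The first two points above can be sketched with Python's standard `ssl` module. The helper name `build_client_context` is hypothetical, and the TLS 1.2 floor is an assumption chosen for illustration; check the current version of the standard and your assessor's guidance for the protocol versions actually required.

```python
import ssl


def build_client_context() -> ssl.SSLContext:
    """Client-side TLS settings that verify the peer and refuse old protocols."""
    # create_default_context() enables certificate chain validation
    # (including expiry checks) and hostname checking.
    context = ssl.create_default_context()
    # Refuse connections negotiated below TLS 1.2 (assumed minimum).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_context()
```

A context configured this way fails the handshake when the server presents an untrusted or expired certificate, or offers only an older protocol version, rather than silently falling back.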

Securing end-user messaging

Much of the PCI DSS focuses on protecting PANs. Requirement 4 sets forth some specific rules about transmitting PANs across open networks. As a result, technologies that your organization normally uses (such as end-user messaging technologies) may need to be adapted, replaced, or discontinued when cardholder data is being transmitted. The main constraints of Requirement 4 are as follows:

- PANs must never be sent unprotected over commercial technologies such as email, instant-messaging, and chat applications.

- Before using any of these end-user technologies, you must ensure that PANs have been rendered unreadable via strong cryptography.

- If a third party requests a PAN, that third party must provide a tool or method to protect the PAN, or you must render the number unreadable before transmission.
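One way to support these constraints is to screen outgoing messages for anything that looks like an unprotected PAN before they leave your systems. The sketch below is illustrative only: the 13-to-19-digit pattern, the Luhn checksum filter, and the function names are assumptions, and a check like this complements encryption rather than replacing it.

```python
import re

# Assumption: candidate PANs are 13 to 19 digits, optionally separated by
# single spaces or hyphens.
PAN_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def passes_luhn(digits: str) -> bool:
    """Return True if the digit string satisfies the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def contains_unprotected_pan(message: str) -> bool:
    """Return True if the message holds a digit run that looks like a PAN."""
    for match in PAN_CANDIDATE.finditer(message):
        digits = re.sub(r"[ -]", "", match.group())
        if passes_luhn(digits):
            return True
    return False
```

A mail or chat gateway could call `contains_unprotected_pan()` on each outgoing message and block or quarantine any message for which it returns `True`.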

When you encrypt cardholder data as part of your network communications process, you must define the appropriate security policies and operational procedures. In addition, you must make sure that the relevant documents are kept up to date, made available to, and followed by all relevant people in your organization.

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

How Can I Restrict Access to Cardholder Data (PCI DSS Requirement 7)?

A considerable portion of the PCI DSS concerns access control mechanisms, which must be sufficiently robust and comprehensive to deliver the protection required for cardholder data.

Requirement 7 of PCI DSS clearly states that you must restrict data access. You have to ensure that critical data can be accessed only by authorized personnel and that you have the appropriate systems and processes in place to limit access based on business needs and job responsibilities. The requirement also calls for you to immediately remove access when access is no longer needed.

Try to keep the number of people who need access to data to the absolute minimum, with access needs identified and documented according to defined roles and responsibilities.

Managing your access policy

The standard requires you to think very carefully about who in your organization has access to system components and the effect of that access on the security of your cardholder data environment. This task becomes much more complex if you have multiple office locations or data centers, or if you use cloud-based service providers to host some of your data.

You’re required to manage your access control policy at quite a granular level, carefully defining the various user roles in your organization (user, administrator, and so on) and specifying which parts of your system and data they can access.

In practice, you need enough controls to create a workable, effective access control policy, so allow adequate planning time to devise the mechanism that best fits your needs.

Assigning “least privilege” access rights

The standard is prescriptive in that it forces you to grant “least privilege” access rights to all user accounts with requests for access documented and approved. The logic is that you grant each person only enough access to the various bits of the system or data he or she needs to perform his or her job functions. An administrator, for example, could define an access policy for another user to view the cardholder data, but she herself wouldn’t be able to read the data directly.

Depending on your environment, you may need to address multiple system types and varying levels of access for network, host, and application-level use and administration. This task can prove to be complex when, for example, you need to give multiple types of users different access rights to your databases.

It’s best to disable access to data by default and then enable any access that’s required. This method makes it easier to prevent access-granting mistakes that could lead (in the worst-case scenario) to a data breach.
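The deny-by-default approach can be sketched as a simple role-to-permission lookup. The role and permission names below are hypothetical and exist only for illustration; a real deployment would map them to its own documented roles.

```python
from typing import Dict, FrozenSet

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "support_agent": frozenset({"view_masked_pan"}),
    "settlement_clerk": frozenset({"view_masked_pan", "view_full_pan"}),
    # The administrator manages policy but cannot read cardholder data.
    "administrator": frozenset({"manage_access_policy"}),
}


def is_allowed(role: str, permission: str) -> bool:
    """Grant access only if the permission is explicitly listed for the role."""
    # Unknown roles and unlisted permissions fall through to "deny".
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

Because nothing is granted unless it appears in the table, a forgotten entry results in a denied request rather than accidental exposure.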

Revoking data access

When a user has a change of role internally, document the change, and modify that user’s privileges as appropriate. Similarly, when a user leaves your company, you need to document the change and then disable or delete his or her user account in alignment with your organization’s policy and procedure.

An established, consistent process can help ensure strong privilege management. In addition, Thales recommends that you periodically run queries on user accounts to verify account activity. You might run a scheduled script on a quarterly basis, for example.
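A periodic account review of this kind might look like the following sketch. The 90-day idle threshold, the function name, and the shape of the input (a mapping of user name to last login time) are assumptions for illustration; the real data would come from your directory or identity system.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def find_stale_accounts(
    last_logins: Dict[str, datetime],
    now: datetime,
    max_idle_days: int = 90,  # assumption: illustrative threshold
) -> List[str]:
    """Return the accounts whose last login is older than the idle threshold."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        user for user, last_login in last_logins.items() if last_login < cutoff
    )
```

The returned list is a starting point for review: each flagged account still needs a documented decision to keep, disable, or delete it.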

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

How Can I Authenticate Access to System Components (PCI DSS Requirement 8)?

Strong security is essential for protecting your systems and data from unauthorized access. Requirement 8 of the PCI DSS contains many elements that you need to address in your access control and password policies for staff members and third parties alike.

Ensuring individual accountability

It’s important to ensure that every user (internal or external) who needs access to your systems has a unique identifier so that no dispute occurs later about who performed a particular task. (For details on handling nonrepudiation, for example, see PCI DSS Requirement 8.1.) Strict enforcement of unique identifiers for each user inherently prevents the use of group-based or shared identities (see PCI DSS Requirements 8.1.5 and 8.5).

You also need to ensure full accountability whenever new users are added, existing credentials are modified, or the accounts of users who no longer need access are deleted or disabled. This accountability includes revoking access immediately for a terminated user, such as an employee who has just left your company (see PCI DSS Requirements 7.1.4 and 8.1.2).

Making access management flexible

Having a compliant user access policy is all well and good, but that policy takes you only part of the way to compliance with the PCI DSS. You’re required to underpin your user access policy with an access management system that spells out various tasks, such as the following:

- Restricting data access by third parties (such as vendors that require remote access to service or support your systems). Grant access only when those parties need it, and monitor their use of your system. Never offer unrestricted 24/7 access.

- Locking out users who make multiple unsuccessful login attempts over a specified period (to make automated password attacks more difficult).

- Making the system unavailable to any user after a specified period of inactivity and requiring a repeat login to continue (to minimize the risk of impersonation).

- Enforcing multifactor authentication methods (normally, tokens or smart cards) for people who attempt non-console administrative or remote access to cardholder-data-environment system components. This enhanced security approach raises the bar for attackers.
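The lockout rule in the list above can be illustrated with a small in-memory counter. This is a sketch under stated assumptions: the class name, the limit of six failures, and the 15-minute window are placeholders, and a production system would persist this state and apply the values set by its own policy.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class LoginThrottle:
    """Track failed logins per user and report when a user should be locked out."""

    def __init__(self, max_failures: int = 6, window_seconds: float = 900.0) -> None:
        # Both defaults are illustrative assumptions, not quoted policy values.
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, user_id: str, now: float) -> None:
        self._failures[user_id].append(now)

    def record_success(self, user_id: str) -> None:
        # A successful login clears the failure history for that user.
        self._failures.pop(user_id, None)

    def is_locked_out(self, user_id: str, now: float) -> bool:
        recent = self._failures[user_id]
        # Drop failures that have aged out of the observation window.
        while recent and now - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures
```

Timestamps are passed in explicitly (for example from `time.monotonic()`) so that the behavior is easy to test.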

Beefing up authentication

For all types of access, the standard expects a strong authentication system. The standard also provides details on implementing and managing this authentication system. In the case of passwords, for example, PCI DSS Requirement 8.2 directs you to do the following:

- Use strong cryptography to render all authentication credentials (such as passwords or passphrases) unreadable during transmission and storage on all system components, thereby devaluing data where it’s most vulnerable to an insider attack.

- Set strict conditions for passwords. As a fundamental requirement, all passwords must be changed at least every 90 days. You must enforce a minimum length of seven characters, including both letters and digits, for any given password. The reuse of previous passwords must be prohibited.

- Supply an initial password to each new user, and require her to change that password the first time she accesses your system.

- Prohibit group or shared passwords.

After you establish an authentication policy, provide it to all users to help them understand and follow the requirements.
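The password conditions listed above can be sketched as a few small checks. This is an illustration, not a complete credential system: it reads the character rule as "at least seven characters with both letters and digits", uses PBKDF2 from the standard library as one example of rendering stored passwords unreadable, and simplifies by assuming one salt per user. The function names and the iteration count are assumptions.

```python
import hashlib
import hmac
from datetime import datetime, timedelta
from typing import List

MIN_LENGTH = 7
MAX_AGE = timedelta(days=90)
ITERATIONS = 100_000  # assumption: illustrative work factor


def meets_composition_rules(password: str) -> bool:
    """Require the minimum length plus at least one letter and one digit."""
    has_letter = any(c.isalpha() for c in password)
    has_digit = any(c.isdigit() for c in password)
    return len(password) >= MIN_LENGTH and has_letter and has_digit


def is_expired(last_changed: datetime, now: datetime) -> bool:
    """Return True once the password is older than the maximum age."""
    return now - last_changed > MAX_AGE


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash so the password itself is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def is_reused(candidate: str, salt: bytes, previous_hashes: List[bytes]) -> bool:
    """Compare the candidate against stored hashes of earlier passwords."""
    candidate_hash = hash_password(candidate, salt)
    return any(hmac.compare_digest(candidate_hash, old) for old in previous_hashes)
```

A password change would be accepted only if `meets_composition_rules()` returns `True` and `is_reused()` returns `False`.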

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

How Can I Monitor Access to Cardholder Data (PCI DSS Requirement 10)?

If you don’t have precise details on how and when your data is being accessed, updated, or deleted, you’ll struggle to identify attacks on your systems. Also, you’ll have insufficient information to investigate if something goes wrong, especially after a data breach.

Fortunately, PCI DSS Requirement 10 calls for keeping, monitoring, and retaining comprehensive audit logs.

Maintaining audit trails

The standard mandates that certain activities — especially reading, writing, or modifying data (see PCI DSS Requirement 10.2) — be recorded in automated audit trails for all system components. These components include external-facing technologies and security systems, such as firewalls, intrusion-detection and intrusion-prevention systems, and authentication servers.

In addition, the standard describes how to record specific details so that you know the who, what, where, when, and how of all data accesses. Any root or administrator user access, for example, should be logged, especially when a privileged user escalates his privileges before attempting data access.

PCI DSS Requirement 10.4 also calls for all cardholder data environment system components to be configured to receive accurate time-synchronization data. If you don’t already have this capability, you may need to upgrade your systems.

One important piece of information to log is any failed access attempt — a good indicator of a brute-force attack or sustained guessing of passwords, especially if the access log has lots of entries. You must also record additions and deletions, such as increased access rights, lower authentication constraints, temporary disabling of logs, and software substitution (which could be a sign of malware).
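As a rough illustration, an audit record that captures the who, what, where, and when of an event, together with a simple scan for repeated failed attempts, might look like this. The field names, the JSON-lines format, and the threshold of five failures are assumptions for the sketch, not values taken from the standard.

```python
import json
from collections import Counter
from datetime import datetime, timezone
from typing import List


def make_audit_record(
    user_id: str, action: str, resource: str,
    origin: str, success: bool, when: datetime,
) -> str:
    """Serialize one audit event as a JSON line (field names are hypothetical)."""
    record = {
        "timestamp": when.astimezone(timezone.utc).isoformat(),  # when
        "user_id": user_id,    # who
        "action": action,      # what
        "resource": resource,  # affected data or component
        "origin": origin,      # where the request came from
        "success": success,    # outcome
    }
    return json.dumps(record, sort_keys=True)


def users_with_repeated_failures(lines: List[str], threshold: int = 5) -> List[str]:
    """Return users whose failed events reach the (illustrative) threshold."""
    failures: Counter = Counter()
    for line in lines:
        record = json.loads(line)
        if not record["success"]:
            failures[record["user_id"]] += 1
    return sorted(user for user, count in failures.items() if count >= threshold)
```

Timestamps are normalized to UTC so that records from different system components can be compared, which is also why accurate time synchronization matters.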

Preventing unauthorized modification of logs

After you create your audit logs, you must ensure that the logs are secured in such a way that they can’t be altered. You must use a centralized logging solution (see PCI DSS Requirement 10.5.3) with restricted access and sufficient capacity to retain at least 90 days’ worth of log data from all system components within the cardholder data environment, with the remainder of a full year available for restoration if needed.
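One technique that can help detect log tampering is a hash chain, in which each entry's digest depends on the digest of the entry before it. The source does not prescribe this method; the sketch below is an assumption-laden illustration with hypothetical function names, and it complements rather than replaces restricted access to the log store.

```python
import hashlib
from typing import List, Tuple

GENESIS = "0" * 64  # placeholder digest that precedes the first entry


def chain_digest(previous_digest: str, entry: str) -> str:
    """Bind an entry to everything logged before it."""
    return hashlib.sha256((previous_digest + entry).encode("utf-8")).hexdigest()


def append_entry(log: List[Tuple[str, str]], entry: str) -> None:
    """Append the entry together with its chained digest."""
    previous = log[-1][1] if log else GENESIS
    log.append((entry, chain_digest(previous, entry)))


def verify_chain(log: List[Tuple[str, str]]) -> bool:
    """Recompute every digest; any edited or removed entry breaks the chain."""
    previous = GENESIS
    for entry, digest in log:
        if chain_digest(previous, entry) != digest:
            return False
        previous = digest
    return True
```

Someone with write access to the whole log could recompute the chain, so in practice the latest digest would be kept on a separate, access-restricted system, or a keyed MAC would be used instead of a plain hash.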

Making regular security reviews

As well as ensuring that required details are generated, centrally stored, and secured against unauthorized access or modification, you must monitor your logs and security events on at least a daily basis, with alerts requiring review at any time of day or night (see PCI DSS Requirements 10.6 and 12.10.3). This requirement helps you identify anomalies and suspicious activity.

Thales recommends you consider implementing a centralized logging solution that accounts for future capacity and includes reporting tools.

Note: This material is drawn from PCI Compliance & Data Protection for Dummies, Thales Limited Edition, by Ian Hermon and Peter Spier.

How Can I Make Stored PAN Information Unreadable?

Following are some of the most popular methods for rendering stored information — especially primary account numbers (PANs) — unreadable.

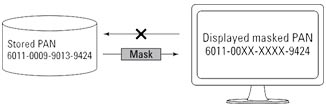

Masking

Masking relates to maintaining the confidentiality of data when it’s presented to a person. The process is familiar to anyone who has used a payment card in a restaurant or shop and then checked the printed receipt; certain digits of the PAN are shown as Xs rather than the actual digits (see figure below). Per PCI DSS Requirement 3.3, PAN display should be limited to the minimum number of digits necessary to perform job functions and should not exceed the first six and last four digits.

Masking a PAN for display purposes.

Source: Thales
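Masking for display can be sketched as follows. The function name `mask_pan` and the use of `X` as the masking character are illustrative assumptions; the limits of six leading and four trailing digits come from the requirement described above.

```python
def mask_pan(pan: str, show_first: int = 6, show_last: int = 4) -> str:
    """Return the PAN with its middle digits replaced by X for display."""
    digits = "".join(c for c in pan if c.isdigit())
    # Never reveal more than the first six and the last four digits.
    if not 0 <= show_first <= 6 or not 0 <= show_last <= 4:
        raise ValueError("at most the first six and last four digits may be shown")
    if len(digits) <= show_first + show_last:
        raise ValueError("PAN is too short to mask")
    hidden = len(digits) - show_first - show_last
    return digits[:show_first] + "X" * hidden + digits[len(digits) - show_last:]
```

For the well-known test number `4111 1111 1111 1111`, `mask_pan()` returns `411111XXXXXX1111`. A receipt printer might call `mask_pan(pan, show_first=0)` to show only the last four digits.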

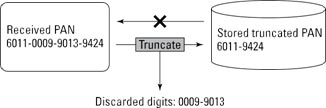

Truncation

Truncation renders stored data unreadable by ensuring that only a subset of the complete PAN is stored. As in masking, no more than the first six and last four digits can be stored.

Truncating a PAN

Source: Thales
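Truncation differs from masking in that the middle digits are discarded before storage instead of being hidden at display time. A minimal sketch, with a hypothetical `TruncatedPan` record type, might look like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TruncatedPan:
    """The only parts of the PAN that are kept in storage."""
    first_six: str
    last_four: str


def truncate_pan(pan: str) -> TruncatedPan:
    """Keep the first six and last four digits and drop everything between."""
    digits = "".join(c for c in pan if c.isdigit())
    if len(digits) <= 10:
        raise ValueError("PAN is too short to truncate")
    return TruncatedPan(first_six=digits[:6], last_four=digits[-4:])
```

Because the middle digits are never written to storage, they cannot be recovered from the stored record.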

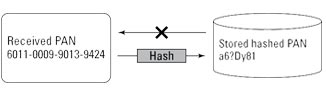

One-Way Hashing

A cryptographic hash function is a well-defined process that converts an arbitrary block of data (in this case, a PAN) to a fixed-length string of data. For practical purposes, every PAN yields a different result. The one-way hash process is irreversible (which is why it’s called one-way); it’s commonly used to ensure that data hasn’t been modified, because any change in the original block of data results in a different hash value.

The figure below illustrates the use of the hash function in the context of the PCI DSS. The technique provides confidentiality (it’s impossible to re‑create a PAN from a hashed version of that PAN), but like truncation, it makes using the stored data for subsequent transactions impossible.

One-way hash of a PAN

Source: Thales

You can’t retain truncated and hashed versions of the same payment card within your cardholder data environment unless you implement additional controls to ensure that the two versions can’t be correlated to reconstruct the PAN.
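A one-way hash of a PAN can be sketched with Python's standard library. The example uses a keyed hash (HMAC-SHA-256) rather than a plain hash; that choice, the function name, and the sample key are assumptions for illustration. The reasoning is that the number of possible PANs is small enough for an attacker to hash every candidate, so a secret key makes that guessing attack harder.

```python
import hashlib
import hmac


def hash_pan(pan: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest of the full PAN as a hex string."""
    # Normalize formatting so that "4111 1111..." and "41111111..." match.
    digits = "".join(c for c in pan if c.isdigit())
    return hmac.new(secret_key, digits.encode("ascii"), hashlib.sha256).hexdigest()
```

The same PAN always yields the same digest under the same key, which allows lookups and duplicate detection without storing the PAN itself. In practice the key would be held in a key management system or HSM, not in application code.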

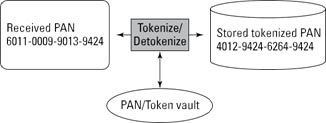

Tokenization